New design guide: VMware NSX with Cisco UCS and Nexus 7000

Back in September 2013 I wrote a piece on why you would deploy VMware NSX with your Cisco UCS and Nexus gear. The gist was that NSX adds business agility, a rich set of virtual network services, and orders of magnitude better performance and scale to these existing platforms. The response to this piece was phenomenal, with many people asking for more details on the how.

The choice is clear. To obtain a more agile IT infrastructure you can either:

- Rip out every Cisco UCS fabric interconnect and Nexus switch you’ve purchased and installed, then proceed to repurchase and re-install it all over again (ASIC Tax).

- Add virtualization software that works on your existing Cisco UCS fabric interconnects and Nexus switches, or any other infrastructure.

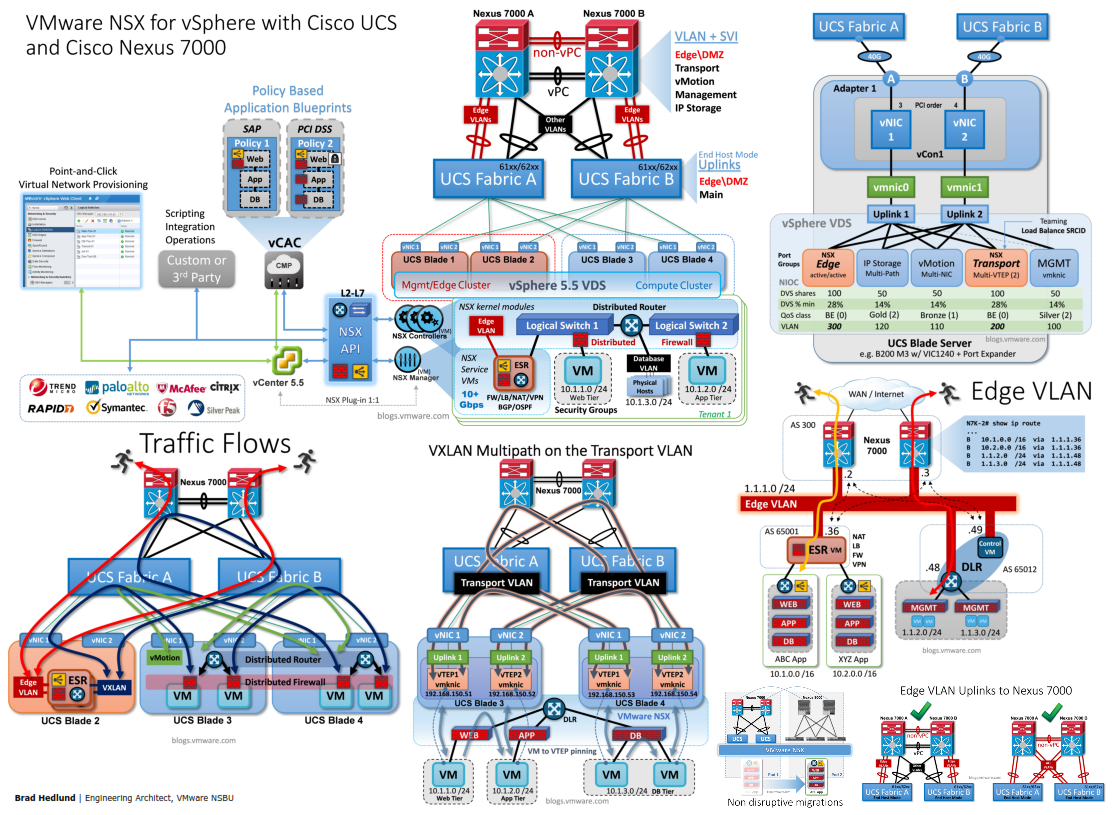

To help you execute on choice #2, we decided to write a design guide that provides more technical details on how you would deploy VMware NSX for vSphere with Cisco UCS and Nexus 7000. In this guide we provide some basic hardware and software requirements and a design starting point. Then we walk you through how to prepare your infrastructure for NSX, how to design your host networking and bandwidth, how traffic flows, and the recommended settings on both Cisco UCS and VMware NSX. As a bonus, there is a 48 x 36 poster that includes most of the diagrams from the guide and some extra illustrations.

Download the full design guide here (PDF)

Download the 48 x 36 poster here (PDF)

Enjoy!

Cheers, Brad