Mind-blowing L2-L4 Network Virtualization by Midokura MidoNet

Today there seems to be no shortage of SDN start-ups chasing the OpenFlow hype in one way or another, aiming to re-invent the physical network – SDpN (software defined physical network). And then there’s a rare breed out there: those solving cloud networking problems entirely with software at the virtual network layer (hypervisor vswitch) – SDvN (software defined virtual network). Nicira was one of that rare breed (look what happened) – and now it’s apparent to me that Midokura, with their MidoNet solution, is another rare-breed SDvN start-up like Nicira, but with what appears to be a differentiated and perhaps even more capable solution.

I first learned of MidoNet from a mind-blowing post on Ivan Pepelnjak’s blog. I had to read that post a few times before I really “got it” – the first clue you’re looking at a rare breed. And like my first light bulb moment with Nicira, I couldn’t stop thinking about MidoNet for weeks afterwards.

Here’s my understanding of the MidoNet solution based on my own conversations with Dan Mihai Dumitriu (CTO) & Ben Cherian (CSO) of Midokura, with some answers to questions I had after reading Ivan’s post.

L2-L4 Network Virtualization

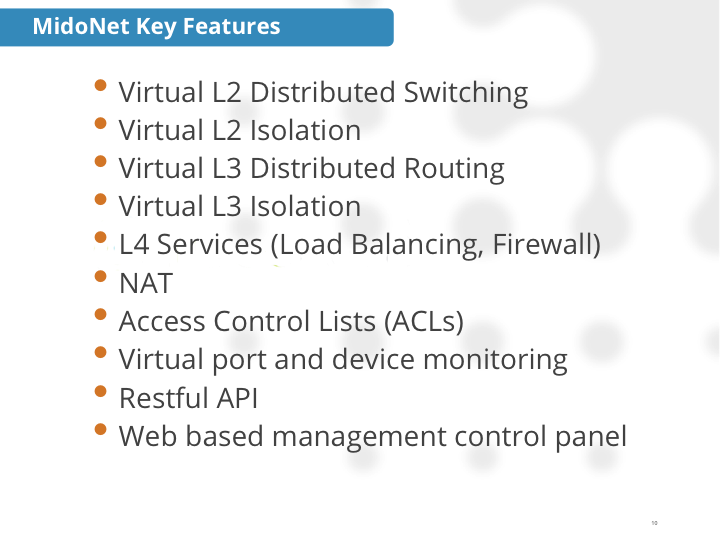

We already know that a cluster of vswitches can behave like a single multi-tenant virtual L2 network switch. This is a well understood and accepted technology which has been further improved upon with L2 Network Virtualization Overlays (NVO) pioneered by Microsoft VL2 and Nicira NVP. Now imagine that very same cluster of vswitches (NVO) behaving like a single, multi-tenant, virtual L3 router. The technology that revolutionized L2 networking in the cloud has moved up the OSI model to include L3 & L4. What was once bolted-on and kludged together is now literally built-in as a cohesive multi-layer solution.

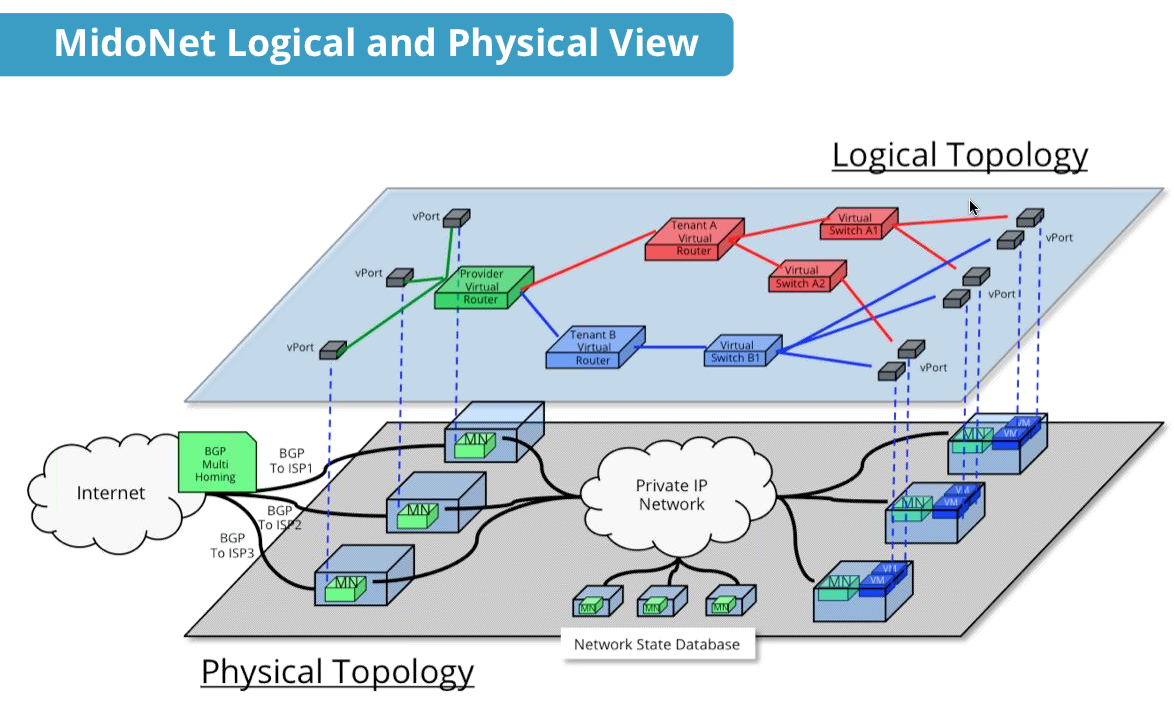

Even more interesting, with MidoNet you can have x86 machines collectively behave as one virtual “Provider Router” interfacing with other routers outside of the cloud with regular routing protocols. These machines provide the “Edge” gateway functionality (which I wrote about here) for the North/South traffic to/from our cloud. On these Edge machines one NIC faces the outside with standard packet formats and protocols. The other NIC faces the inside with pick your favorite tunneling protocol. No real need for hardware VXLAN or anything like that from a switch vendor.

Distributed L4 Service Insertion

We typically think of the vswitch as being a basic and dumb L2 networking device – forcing our hand into designing complex, fragile, and scale challenged L2 physical networks. However that’s not the case with SDvN solutions such as MidoNet (and Nicira). The networking kernel bits providing basic machine network I/O (and the basic L2 between two VMs) are the very same kernel bits that can also function as our gateway router, load balancer, firewall, NAT, etc.

The next trick is to get the L4 services configuration and session state distributed and synchronized across all machines. If you can do that, the L4 network services required (e.g. firewall and load balancing) can be executed on the very machine hosting the VM requiring those services. And Midokura has done just that. With MidoNet, the days of complicating your network design for the sake of steering traffic to a firewall or load balancer are gone. And all the usual spaghetti mess flows and service insertion bandwidth choke points are gone too. You can begin to see why I’m an unapologetic Network Virtualization fan boy.

Decentralized Architecture

Each host machine is running the Open vSwitch kernel module. This is the packet processing engine (the fast path data plane) fully capable of L2-L4 forwarding. You can think of this as the (software) ASIC of your host machine vswitch. By itself the software ASIC is useless. As with a physical switch, the ASIC needs to be programmed with a configuration and flow table by a control plane CPU. And that control plane CPU can be off-box and centralized somewhere (Nicira), or it can be distributed and exist individually and locally on each host (MidoNet).

Each host machine runs the MidoNet agent (MN) which operates on a local copy of the virtual network configuration, and the agent acts upon that configuration when a packet is received on ingress from a vPort (the logical network interface that connects to a virtual machine, or a router for example). Think of the local MidoNet agent as the control plane CPU for the local vswitch on that host. Any special network control packets received on a vPort, such as ARP requests, will be handled and serviced locally by the MidoNet agent. No more ARP broadcasts bothering the network.
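To make the local ARP handling concrete, here’s a minimal sketch in Python. The table layout, tenant names, and function name are my own illustration (assumptions, not MidoNet’s actual data structures); the point is only that the answer comes from the host’s local copy of the configuration rather than from a broadcast.

```python
from typing import Dict, Optional

# Hypothetical local copy of the virtual network configuration:
# tenant network name -> (IP address -> MAC address).
LocalArpTable = Dict[str, Dict[str, str]]


def answer_arp_locally(table: LocalArpTable, network: str, target_ip: str) -> Optional[str]:
    """Return the MAC for target_ip within one tenant network, or None if unknown.

    Nothing is broadcast: the lookup uses only state already on this host.
    """
    return table.get(network, {}).get(target_ip)


# Illustrative data only. Tenants may reuse the same IP space.
arp_table: LocalArpTable = {
    "blue": {"10.0.0.1": "02:00:00:00:00:01", "10.0.0.2": "02:00:00:00:00:02"},
    "red": {"10.0.0.1": "02:00:00:00:01:01"},
}
```

Because the lookup is scoped per tenant network, overlapping address space between tenants isn’t a problem in this sketch.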

Similarly, L2-L4 forwarding lookups and packet processing (NAT, encap, etc.) are handled locally by the MidoNet agent based upon its local copy of the virtual network configuration. And as this happens the MidoNet agent builds the flow state on its local data plane (the Open vSwitch kernel module). The host vswitch data plane does not rely on some nebulous central controller for programming flows – the local agent handles that. This is one significant point of differentiation between Midokura MidoNet and Nicira (VMware).
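The split between the agent and the kernel data plane can be sketched as a flow cache with a local slow path. This is a toy model under my own assumptions: the `Datapath` class, the three-field match key, and the action strings are all hypothetical, and a real datapath matches on far more fields.

```python
from typing import Callable, Dict, Tuple

FlowKey = Tuple[str, str, str]  # (ingress vPort, src IP, dst IP): a simplified match
Action = str


class Datapath:
    """Stand-in for the kernel fast path: an exact-match flow cache."""

    def __init__(self, slow_path: Callable[[FlowKey], Action]) -> None:
        self.flows: Dict[FlowKey, Action] = {}
        self.slow_path = slow_path
        self.misses = 0

    def receive(self, key: FlowKey) -> Action:
        action = self.flows.get(key)
        if action is None:
            # Flow miss: ask the agent on this same host, then cache its answer.
            self.misses += 1
            action = self.slow_path(key)
            self.flows[key] = action
        return action


def local_agent(key: FlowKey) -> Action:
    """Hypothetical agent: decides using only locally held configuration."""
    _vport, _src, dst = key
    vm_location = {"10.0.0.2": "host-b"}  # illustrative local config copy
    if dst in vm_location:
        return f"tunnel_to:{vm_location[dst]}"
    return "drop"
```

Only the first packet of a flow reaches the agent; later packets hit the cached entry, and at no point does the host consult anything off-box.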

MidoNet’s decentralized flow state architecture demonstrates that this solution was built for scalability and robustness. It takes some serious talent in distributed systems to pull that off – which Midokura has. Their technology team is a who’s-who roster of ex-Amazon and ex-Google.

Fully Distributed

MidoNet centralizes the virtual network configuration state and stateful L4 session state on a “Network State Database” – and even then, this database is a distributed system itself. The Network State Database uses two different open source distributed systems platforms. Any stateful L4 session state (NAT, LB, security groups) is kept in an Apache Cassandra database, while everything else is stored by an Apache ZooKeeper cluster (ARP cache, port learning tables, network configuration, topology data, etc.). The virtual network configuration is logically centralized but still physically distributed. In a normal production deployment, the Network State Database will operate on its own machines, apart from the machines hosting VMs or interfacing with routers.

Each MidoNet agent has a Cassandra and ZooKeeper client that reads the virtual network configuration and L4 session state from the Network State Database, with the result being that all host machines have the same virtual network configuration and session state. But this is a cloud, and things change. Virtual machines move between hosts, new tenants are created, routers fail, etc. Such events are configuration changes. And to facilitate the synchronization of these configuration changes, MidoNet agents and the Network State Database communicate bidirectionally for distributed coordination.
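Here’s a rough sketch of that two-way coordination as a watch/notify pattern. Everything in it is an in-memory stand-in of my own: `StateStore`, `Agent`, and the key names are hypothetical and are not the Cassandra, ZooKeeper, or MidoNet APIs.

```python
from typing import Callable, Dict, List

Watcher = Callable[[str, str], None]


class StateStore:
    """In-memory stand-in for the Network State Database."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.watchers: List[Watcher] = []

    def subscribe(self, watcher: Watcher) -> None:
        self.watchers.append(watcher)

    def put(self, key: str, value: str) -> None:
        self.data[key] = value
        for watcher in self.watchers:  # push the change to every agent
            watcher(key, value)


class Agent:
    """Hypothetical per-host agent holding a local copy of the shared state."""

    def __init__(self, name: str, store: StateStore) -> None:
        self.name = name
        self.store = store
        self.local: Dict[str, str] = dict(store.data)  # initial read
        store.subscribe(self.on_change)

    def on_change(self, key: str, value: str) -> None:
        self.local[key] = value  # store -> agent direction

    def report(self, key: str, value: str) -> None:
        self.store.put(key, value)  # agent -> store direction
```

If the agent on one host reports that a VM has arrived, every other agent’s local copy converges on the same value, which is the property the rest of the design leans on. A real system also has to deal with ordering, partitions, and failures, none of which this sketch attempts.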

That’s the real “trick” – providing both a distributed and consistent virtual network configuration state across all machines.

Note: There’s no OpenFlow here at all – another point of difference from Nicira (albeit insignificant). That’s just the result of one being a centralized architecture (Nicira) and the other being distributed (MidoNet).

Symmetrical

As we’ve already discussed, the configuration state of the virtual network is the same on all machines – the entire L2-L4 configuration. Even a machine acting as an Edge “Provider Router” has the same network configuration and state information as the machines hosting VMs. And vice versa – a host machine has the L3 routing configuration and device state of an Edge machine. The configuration state is totally symmetrical.

There’s a significant and positive consequence to this – the virtual network traffic is also symmetrical and always takes the most direct path with no spaghetti mess of flows on the network. This is because each machine can act upon the full multi-tenant L2-L4 configuration/state on ingress – execute any required L2-L4 processing – and send the packet directly to the destination machine.

For example, if you have (3) “Provider Router” Edge machines, as shown in the diagram above, a packet could arrive from the “Internet” on any one of those machines, destined for a VM which could be on any one of the (3) host machines. Because the Edge machines have the same configuration/state information as the host machines, the Edge machine knows exactly which machine is hosting the destination VM and can send the packet directly to it. And vice versa. Maybe one of the (3) Edge machines loses its link to the “Internet” router. That’s a networking state change realized on all machines, including the host machines. And thus a host machine delivering a packet from a VM to the “Internet” would avoid sending traffic toward the affected Edge machine.
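The Edge failure case can be sketched as follows. The hashing scheme and the `pick_edge` helper are assumptions of mine for illustration; I don’t know how MidoNet actually spreads flows across Edge machines. What matters is that the uplink state is known on the host itself, so the affected Edge is simply never chosen.

```python
import zlib
from typing import Dict


def pick_edge(edge_uplink_up: Dict[str, bool], flow_id: str) -> str:
    """Pick an Edge machine for an outbound flow, skipping any with a failed uplink.

    edge_uplink_up is the host's local view of each Edge machine's uplink state.
    """
    healthy = sorted(name for name, up in edge_uplink_up.items() if up)
    if not healthy:
        raise RuntimeError("no Edge machine has a working uplink")
    # Deterministic hash so packets of one flow keep using the same Edge.
    return healthy[zlib.crc32(flow_id.encode()) % len(healthy)]


# Illustrative state: edge-2 has lost its link to the "Internet" router.
edges = {"edge-1": True, "edge-2": False, "edge-3": True}
```

When `edge-2` recovers and its state flips back to `True` in the shared configuration, it rejoins the candidate set without any change on the VM side.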

Note: I put “Internet” in quotes because what is shown as the “Internet” in the diagram above could just as well be the North/South data center routers.

Another example is the scenario where the Blue tenant is allowed to communicate with the Red tenant. Normally that traffic would need to go through multiple L3 hops through various routers and firewalls. Not here though – because each machine has the full L2-L4 configuration for all tenants, and can act upon it with its L2-L4 kernel module data plane. And as a result the traffic will be sent directly from the machine with the Blue tenant to the machine with the Red tenant – with all necessary L3-L4 packet transformations applied (NAT).
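As a sketch of the Blue-to-Red case: the ingress host applies the NAT rule itself and then looks up where the destination VM lives, so the packet leaves already translated and goes straight to the right machine. The addresses, table names, and `process_on_ingress` function below are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str


# Hypothetical slices of the configuration that every host holds locally.
DNAT_RULES: Dict[str, str] = {"203.0.113.10": "192.168.1.5"}  # Red's public IP -> Red VM
VM_LOCATION: Dict[str, str] = {"192.168.1.5": "host-2"}       # VM IP -> hosting machine


def process_on_ingress(pkt: Packet) -> Tuple[Packet, Optional[str]]:
    """Apply NAT on the ingress host, then resolve the destination machine.

    Returns the transformed packet and the host to tunnel it to (None means drop).
    """
    translated = replace(pkt, dst_ip=DNAT_RULES.get(pkt.dst_ip, pkt.dst_ip))
    return translated, VM_LOCATION.get(translated.dst_ip)
```

There is no intermediate router or firewall hop in this model: the only machines that touch the packet are the one hosting the Blue VM and the one hosting the Red VM.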

Tunneling

Packet delivery from one MidoNet machine to another is encapsulated into a tunneling protocol – any tunneling protocol supported by the Open vSwitch kernel module. At this time MidoNet is using GRE. It could be something else (CAPWAP, NVGRE, STT) if there’s a particularly good reason (better per flow load balancing on the physical IP fabric), but the tunneling protocol implemented is not the most interesting part of any Network Virtualization solution, despite playing an important role – like a cable. It’s how the system implements its configuration, control, and management planes that will differentiate it from others.
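For a feel of what the “cable” looks like on the wire, here’s a small sketch that wraps an inner Ethernet frame in a GRE header carrying a key, following the standard GRE layout (key-present flag 0x2000, protocol type 0x6558 for Ethernet frames). How MidoNet actually uses the key field is not something I know, so treat the key here as an assumption; the outer IP header is left to the host’s network stack.

```python
import struct
from typing import Tuple

GRE_FLAG_KEY = 0x2000   # K bit set, version 0
ETHERTYPE_TEB = 0x6558  # Transparent Ethernet Bridging (Ethernet inside GRE)


def gre_encap(inner_frame: bytes, key: int) -> bytes:
    """Prepend an 8-byte GRE header (flags, protocol type, key) to an Ethernet frame."""
    header = struct.pack("!HHI", GRE_FLAG_KEY, ETHERTYPE_TEB, key)
    return header + inner_frame


def gre_decap(packet: bytes) -> Tuple[int, bytes]:
    """Return (key, inner frame); reject anything that isn't keyed Ethernet-in-GRE."""
    flags, proto, key = struct.unpack("!HHI", packet[:8])
    if flags != GRE_FLAG_KEY or proto != ETHERTYPE_TEB:
        raise ValueError("not a keyed Ethernet-in-GRE packet")
    return key, packet[8:]
```

The encapsulation itself is just these eight bytes, which is why the interesting part of the system is elsewhere.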

But of course the tunneling allows us to build our physical data center network fabric any way we like. I personally like the Layer 3 Leaf/Spine architecture of fixed switches.

Hypervisors & Cloud Platforms

MidoNet supports the KVM hypervisor and Midokura is currently targeting the OpenStack and CloudStack cloud platforms. They have developed API extensions and plugins that snap MidoNet into the existing OpenStack architecture, both for the Essex release and the new Folsom release.

VMware is a non-starter (forget about it – don’t even ask). VMware is a closed networking platform and even more competitive to other network vendors now than ever before with the acquisition of Nicira.

One interesting hypothetical for me is Microsoft Hyper-V. Microsoft’s networking environment is not closed (yet) to the same degree as VMware. Open vSwitch is written in C and could be ported to Windows Server 2012. Something tells me a team in Redmond has already tried that. So in theory MidoNet could be a virtual networking solution for customers choosing to build their cloud with Microsoft. Or perhaps I should say… giving customers a better reason to choose Microsoft instead of VMware.

Tradeoffs

Midokura has done the heavy lifting – building a distributed system of MidoNet agents – which provides a solid foundation to deliver Network Virtualization beyond simple Layer 2, and into the realm of distributed multi-tenant L3 and L4 service insertion. However, like anything else there are tradeoffs to the design choices you make. Compared to a more centralized model, the MidoNet agent on each host is a thicker footprint than a lighter-weight OpenFlow agent (Nicira).

Some of the natural questions we should be asking are things such as:

- What kind of resource drain is the MidoNet agent? CPU, Memory, Disk, Network I/O?

- How do the different L2-L4 features affect those host resources if and when configured?

- How does the size of the deployment relate to host resource consumption, if at all?

- What is the latency to replicate a configuration/state change to all hosts?

- Does the scale of the system impact the change latency?

- What kind of change rate can the system handle and what would be a “normal” change rate?

- Can the machines send data back to the Cassandra cluster for analytics?

- Can we have a configuration feedback loop based on such analysis?

- Can I “move” a virtual network environment from one data center to another?

Network Virtualization is Awesome

It’s amazing to me that with just some x86 machines and a standard Layer 3 IP fabric you can build an impressive IaaS cloud with fully virtualized and distributed scale-out L2-L4 networking. I’m a big fan (boy), and extremely intrigued by these rare breed solutions such as Midokura MidoNet and Nicira.

Cheers, Brad