Considering 10GE Hadoop clusters and the network

Does 10GE Hadoop make any sense? And if so, how might you design the cluster? Let’s discuss some rationale for and against 10GE Hadoop and then look at some potential network setups for 10G clusters. If you need a quick intro or refresher, read this post on the basics of Hadoop clusters and the network, and then come back.

The rationale behind 1GE Hadoop

Hadoop was designed by a web company (Yahoo) out of necessity for efficiently storing and analyzing the inherently enormous data set of web scale applications. The assumption here is that your money-making application is going to have a large data set, like it or not. So the goal is pretty simple: improve the bottom line by building an infrastructure to handle your large data set at the lowest possible costs (expenses), with the best possible user experience (income). And it should scale. Need more data capacity and more bandwidth? No problem. You simply add more racks of low cost machines, growing the cluster horizontally as needed inside your warehouse sized data center. As such, the web scale Hadoop clusters can grow quite large (thousands of machines) so every incremental cost per machine adds up fast. Really fast. So the design philosophy here is to keep each machine and rack switch as cheap as possible, using commodity components wherever possible such as the built-in 1GE NICs (LOM), and 1GE rack switches. For these reasons, 10GE Hadoop usually doesn’t make much sense, or is very difficult to cost justify (but not always).

But Hadoop isn’t just for the web companies and warehouse sized data centers anymore. Other industries such as retail, banking & insurance, telco, governments, etc. are now using Hadoop to get more value out of the data they once threw away (or never made an attempt to collect). Here, the large data set may be, dare I say, optional: voluntary rather than inherent to the core business. The IT bottom line works a bit differently here and there isn’t a warehouse sized data center. For these enterprises, does 10GE Hadoop deserve a little more consideration? I think so. But as always the standard disclaimer applies: it depends.

The case for 10GE Hadoop

1. You’re not Yahoo. You’re an average enterprise with a modest (but growing) Hadoop deployment. You’re already deploying 10GE in other areas of the IT infrastructure (perhaps a private cloud), and keeping operations consistent and resources fungible makes more than a modicum of sense. And the incremental cost of 10GE doesn’t have the same magnitude here as it does in larger clusters with thousands of nodes.

2. Space is at a premium. You don’t have a warehouse sized data center. So you’d like the ability to scale your Hadoop deployment without eating up every last available rack in your modest data center.

3. Your Hadoop cluster runs a lot of ETL type workloads. So there’s a lot of intermediate data flying around the cluster from Mappers to Reducers and a lot of HDFS replication traffic between data nodes. Here, your cluster might run more efficiently with 10GE machines.

4. You’re deploying storage dense 2RU machines (such as the Dell PowerEdge C2100 or R720xd) with perhaps 24TB per node. One of these bad boys with 12-16 cores may need more network I/O than your typical 8 core 1RU 4TB machine. And when this node fails (or worse yet, an entire rack) there’s a ton of data that needs to be re-replicated throughout the cluster.

5. You are Yahoo. You have a warehouse sized data center but it’s still not enough. You’re having so much difficulty getting machines within 300 meters of the cluster interconnect switches that it’s time to start scaling the nodes deep (see #4), because you’ve reached practical scale-out limits.

6. In a modest cluster the incremental cost of 10GE may not be that much more than 1GE, and it gets cheaper with each passing day. 10G switch and NIC prices are falling fast, especially the basic switches and NICs. You don’t need fancy CNAs or DCB here.
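To get a rough feel for the storage-dense node argument in the list above, here is a minimal back-of-the-envelope sketch. It only computes how long it takes to push a node’s worth of data through a single link at line rate. That is an assumption for illustration, not a model of HDFS: real re-replication is spread across many nodes and is also limited by disks and protocol overhead. The function name and the `efficiency` parameter are mine.

```python
def transfer_hours(terabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move `terabytes` (decimal TB) over one link.

    `efficiency` is a hypothetical fudge factor (1.0 = perfect line rate).
    """
    bits = terabytes * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


# Node sizes taken from the post: a 4TB 1RU node and a 24TB 2RU node.
for label, tb in (("4TB 1RU node", 4), ("24TB 2RU node", 24)):
    for gbps in (1, 10):
        print(f"{label} over {gbps}GE: {transfer_hours(tb, gbps):.1f} h")
```

Even as a crude upper bound for a single link, the gap between roughly two days and a few hours for 24TB shows why node density changes the network conversation.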

The case against 10GE Hadoop

1. 10GE NICs are still not widely available as LOM. It’ll take extra cost and effort to get your machines equipped with 10GE.

2. 10GE ToR switches are about 3x the cost of a standard 1GE switch, port for port.

3. Your workloads don’t stress the network much. Your jobs don’t move or change a lot of data. Rather, you’re counting and correlating instances of data within the data. So the machine to machine passing of data (intermediate data) is light, and 1GE should work just fine.

4. There isn’t a lot of published data yet to suggest a clear cost/benefit case for 10GE Hadoop (if you’re aware of some data leave a note and link in the comments). The discussion is still largely theoretical at this point (as far as I can tell). On the other hand, the cost/benefit baseline of standard 1GE Hadoop is pretty well understood.

5. The money spent equipping each node and rack with 10GE could otherwise be spent on adding more nodes to your cluster. If your workloads are more CPU and storage bound than network bound (see #3), adding nodes & disks to your cluster makes more sense than adding network.

6. The performance of the cluster isn’t that important to you. You’re using Hadoop for offline batch processing, and if a given job finishes in 1 hour, or 20 minutes, nobody really cares that much.

Building a network for 10GE Hadoop

A really small 10G cluster (just one or two racks) could be as simple as having a pair of 10GE top-of-rack (ToR) switches for, say, 40 nodes. You’d link those two switches together with multi-switch LAG technology (shown here as VLT), have each node connect to both switches with a LAG, and then link your 10GE switches to the rest of the network for external access (if needed, preferably with L3). Pretty straightforward. A nice switch for that would be the Dell Force10 S4810 line rate L2/L3 10G/40G switch. Sure, you could use any basic dirt cheap L2 switch here, but you need to consider what capabilities your ToR switch has that will allow you to elegantly scale beyond your first two racks (such as Layer 3 switching, auto provisioning, and Perl/Python operations).

Looking beyond your first two racks, you can use a cost-effective 10GE switch to provide the inter-rack cluster interconnect (Spine). You don’t need an expensive, monstrous, power sucking chassis switch for this. A low power, low cost, fixed switch will work just fine. Again, we can use the S4810 for that too. Shown below.

With a pair of 1RU switches acting as your 10GE Hadoop cluster interconnect you can realistically scale that to 160 dual attached nodes, assuming an over-subscription ratio of 2.5:1 between racks (line rate within the rack). Considering your 2RU nodes can have 24TB or more (each), you’re looking at a cluster with well over 1 Petabyte of usable storage (after 3x replication). Not bad at all.
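The arithmetic behind those two figures can be sketched as follows. This is just a sanity check under stated assumptions: 40 nodes per rack at 10GE each, and 16 x 10GE uplinks per ToR switch (the uplink count used later in this post). The function names are mine, and the storage figure ignores any space reserved for non-HDFS use.

```python
def oversubscription(nodes_per_rack: int, node_gbps: float,
                     uplinks: int, uplink_gbps: float) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth on one ToR switch."""
    return (nodes_per_rack * node_gbps) / (uplinks * uplink_gbps)


def usable_tb(nodes: int, raw_tb_per_node: float, replication: int = 3) -> float:
    """Raw capacity divided by the HDFS replication factor."""
    return nodes * raw_tb_per_node / replication


print(oversubscription(40, 10, 16, 10))  # 400G down / 160G up
print(usable_tb(160, 24))                # 160 nodes x 24TB, 3 copies of everything
```

With these inputs the ratio works out to 2.5:1 and the usable capacity to 1,280TB, which is where "well over 1 Petabyte" comes from.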

Because Hadoop does not require Layer 2 adjacency between nodes in the cluster, we’ll use good old-fashioned L3 switching between racks. It’s simple, well understood, and it scales. Pick your favorite routing protocol: OSPF, IS-IS, BGP. It doesn’t matter which.
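With routing between racks, each rack typically gets its own IP subnet. Here is a minimal sketch of carving per-rack subnets out of one block with Python’s standard `ipaddress` module. The `10.10.0.0/16` supernet, the `/26` size, and the choice of the first address as the gateway are all assumptions for illustration, not something this design prescribes.

```python
import ipaddress


def rack_subnets(supernet: str, racks: int, prefix: int = 26):
    """Return the first `racks` subnets of size /`prefix` from `supernet`."""
    net = ipaddress.ip_network(supernet)
    subnets = net.subnets(new_prefix=prefix)
    return [next(subnets) for _ in range(racks)]


# Hypothetical addressing plan for a four rack cluster.
plan = rack_subnets("10.10.0.0/16", racks=4)
for i, subnet in enumerate(plan, start=1):
    gateway = subnet.network_address + 1       # assumed convention
    usable = subnet.num_addresses - 2          # minus network and broadcast
    print(f"rack{i}: {subnet} gateway {gateway} ({usable} usable addresses)")
```

A /26 leaves 62 usable addresses, enough for 40 nodes plus the gateway, and a predictable subnet-per-rack layout keeps the routing table easy to summarize.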

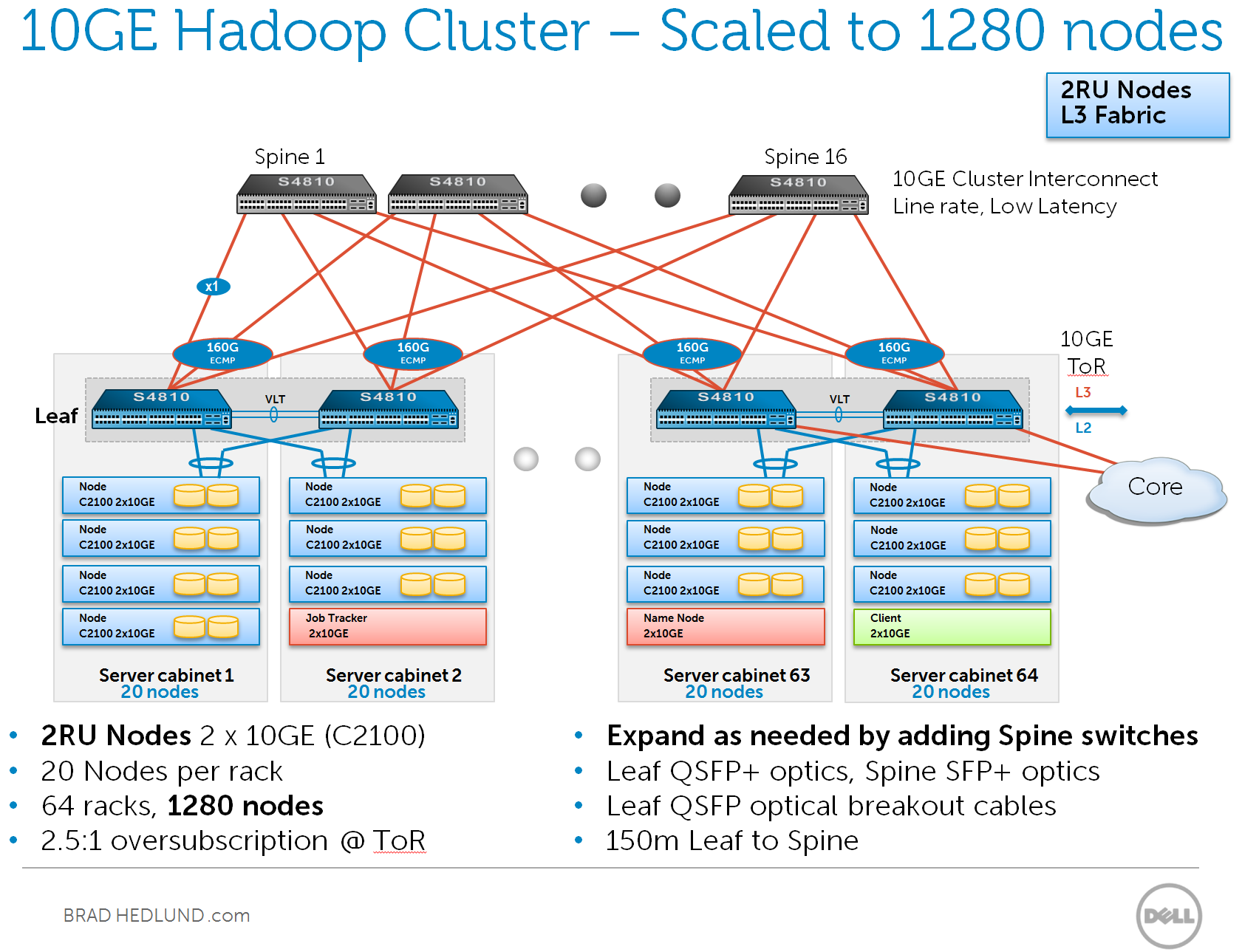

If 160 nodes isn’t enough, you can take the same architecture and scale it out to 1280 nodes by simply adding more of the same 1RU switches in the Spine layer as your cluster grows, up to (16) Spine switches. Here, each ToR switch has 16 x 10GE uplinks and connects 1 x 10GE to each Spine switch. This is possible because we chose to use Layer 3 switching for the cluster interconnect, so we’re not bound by the typical Layer 2 topology constraints. See below.
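The 160 and 1280 node figures fall out of simple port counting. Here is a minimal sketch, assuming each Spine switch offers 64 x 10GE ports (48 SFP+ ports plus 4 QSFP+ ports broken out to 10GE), 40 nodes per rack, and a pair of ToR switches per rack for dual attached nodes. The function and parameter names are mine.

```python
def max_nodes(spine_ports: int, spines: int, uplinks_per_tor: int,
              nodes_per_rack: int = 40, tors_per_rack: int = 2) -> int:
    """Largest cluster a two-tier Leaf/Spine fabric can hold, by port count."""
    if uplinks_per_tor % spines:
        raise ValueError("uplinks must divide evenly across the Spine switches")
    links_per_spine = uplinks_per_tor // spines   # links from one ToR to one Spine
    tors = spine_ports // links_per_spine         # ToR switches one Spine can terminate
    racks = tors // tors_per_rack
    return racks * nodes_per_rack


print(max_nodes(64, spines=2, uplinks_per_tor=16))                    # 160
print(max_nodes(64, spines=16, uplinks_per_tor=16))                   # 1280
print(max_nodes(64, spines=16, uplinks_per_tor=16, tors_per_rack=1))  # 2560
```

The last line shows what happens if each rack has a single ToR switch and nodes are single attached: the same Spine ports reach twice as many racks.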

With larger Hadoop clusters such as this, you might consider connecting each node with a single link to a single switch to lower costs and double your potential cluster size. After all, with 64 racks, if one entire rack fails you’ve only lost a small fraction of the cluster resources. That might not be worth doubling up connections to each host for the sake of redundancy.

If you know you’re going to be building a fairly large 10GE Hadoop cluster from the outset, say, 150-300 nodes, you might want to consider building it with a 40G interconnect. Why? You’ll have fewer Spine switches, fewer cables from ToR to Spine, and fewer ports to configure in the network. Another big advantage here is that 40G optics cost only about 1.8x as much as 10G optics. So as your cluster scales you’ll save a lot of money there.

Because of the 65% cost advantage with 40G optics, according to my math, any cluster of 320-640 nodes is more cost effective with 40G spine switches compared to building the same cluster with 10G Spine switches. Again, working with the same parameters of 2.5:1 over-subscription rack-to-rack, line rate in-rack.

Here again, you don’t need a monstrous power sucking chassis switch with expensive 40G linecards. Instead, you can use a comparatively low cost, low power, 2RU fixed switch such as the Dell Force10 Z9000 with 32 ports of 40G. See below.

With a 40G interconnect the same principles of scaling out the Spine layer apply here too. Using the S4810 ToR (Leaf) switches with 4 x 40G uplinks, you can grow your 40G Spine from (2) switches to (4) and double the cluster size from 320 nodes to 640.
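To see the "fewer Spine switches, fewer cables" point in numbers, here is a rough comparison sketch. It assumes 40 dual attached nodes per rack with two ToR switches, a 10G design with 16 x 10GE uplinks per ToR into 64 port Spine switches, and a 40G design with 4 x 40G uplinks per ToR into 32 port Spine switches. It counts ports only, so the Spine count is a minimum, and the function name is mine.

```python
import math


def fabric(nodes: int, uplinks_per_tor: int, spine_ports: int,
           nodes_per_rack: int = 40, tors_per_rack: int = 2) -> dict:
    """Count ToR switches, ToR-to-Spine cables, and Spine switches needed."""
    racks = math.ceil(nodes / nodes_per_rack)
    tors = racks * tors_per_rack
    cables = tors * uplinks_per_tor
    spines = math.ceil(cables / spine_ports)
    return {"tors": tors, "cables": cables, "spines": spines}


for nodes in (320, 640):
    print(nodes, "nodes, 10G Spine:", fabric(nodes, uplinks_per_tor=16, spine_ports=64))
    print(nodes, "nodes, 40G Spine:", fabric(nodes, uplinks_per_tor=4, spine_ports=32))
```

At 640 nodes, both designs carry the same 160G of uplink bandwidth per ToR, but the 40G design needs a quarter of the cables and half the Spine switches.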

Should you need to connect your cluster to the outside world, the best place to do that is at the Leaf layer ToR switches. Using ports on your Spine switches for this will unnecessarily punish your cluster size, because every port on the Spine represents an entire rack of Hadoop nodes. Just grab a few ports on a pair of ToR switches and connect to the Core with a Layer 3 link, providing good isolation and security for your cluster. You can use BGP or even static routes here to keep your cluster’s internal routing protocol safely isolated from the outside.

So you’ve gone insane with 10G Hadoop and 640 nodes just isn’t enough for one cluster? No problem. Take those Z9000 switches in the Spine and use them as 128 x 10G switches, instead of 32 x 40G. Then take your S4810 ToR switches and uplink them with 16 x 10G. You’ll need QSFP optical breakout cables for the ToR uplinks and Spine downlinks. Be sure to note that using the breakout cable may reduce your 10G link distance to 100 meters.

You can scale out to (16) Z9000 switches in the Spine, because each ToR has (16) uplinks. And you can have (128) ToR switches in the cluster, because each Z9000 has 128 ports. Again, the scale-out capability is possible because we’re using good old-fashioned Layer 3 switching from the ToR to the interconnect. This would not be practical with a Layer 2 setup unless you had TRILL, which isn’t really available yet. It would be pointless anyway, because the Hadoop nodes simply do not care whether they’re a Layer 2 or Layer 3 hop away from the other nodes.

You can build clusters even larger than this by adding a 3rd stage to your fabric, where your ToR connects to a Leaf, and the Leaf connects to a Spine. In such a case, cluster sizes of over 20,000 10GE hosts are possible using the same low cost, low power, 1RU and 2RU fixed switches.

For very large clusters such as this, or even small ones, having the ability to automate the switch deployment can be quite handy. Just as a server can bootstrap itself from the network, download an OS, and retrieve configuration files from a CMDB, the switches in the network should be capable of that too. In the case of the switches discussed here, you have that, and you also have Perl and Python scripting capabilities to customize operations with a familiar scripting language.
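As one example of the kind of thing worth scripting, here is a minimal sketch that generates a ToR-to-Spine cabling plan, which could feed labels, port descriptions, or a provisioning database. It is plain Python and does not use any switch-specific API. The device and port names (`tor1`, `uplink1`, `spine1`, `port1`) are hypothetical, and spreading each ToR’s uplinks evenly across the Spine switches is my assumption.

```python
def cabling_plan(tors: int, spines: int, uplinks_per_tor: int):
    """Return (tor, uplink, spine, spine_port) tuples for every fabric link."""
    if uplinks_per_tor % spines:
        raise ValueError("uplinks must divide evenly across the Spine switches")
    per_spine = uplinks_per_tor // spines   # links from each ToR to each Spine
    plan = []
    for t in range(tors):
        for u in range(uplinks_per_tor):
            spine = u // per_spine                        # which Spine this uplink lands on
            spine_port = t * per_spine + (u % per_spine)  # next free block of ports
            plan.append((f"tor{t + 1}", f"uplink{u + 1}",
                         f"spine{spine + 1}", f"port{spine_port + 1}"))
    return plan


# The small design from earlier: 8 ToR switches, 2 Spine switches, 16 uplinks each.
plan = cabling_plan(tors=8, spines=2, uplinks_per_tor=16)
for link in plan[:3]:
    print(" -> ".join(link))
print(len(plan), "links total")
```

Generating the plan from a handful of parameters means adding a rack is a matter of rerunning the script rather than hand-editing a spreadsheet.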

Summary

10GE is not the same slam dunk no-brainer for Hadoop as it may be for other things, such as dense server virtualization and IP storage. For some, it just doesn’t make financial sense. For others, 10GE Hadoop will make sense for reasons such as supporting dense nodes, scaling with limited data center space, existing infrastructure and operations around 10GE, and network-intensive jobs hitting the cluster.

Just like you would with a 1GE cluster, you can start small and build out some pretty impressive clusters as you grow by using a scale-out architecture of low cost, low power, fixed 10G or 40G interconnect switches – and building the network with a standard Layer 3 switching configuration. You don’t need Layer 2 between the racks and you don’t need big expensive monstrous chassis switches to get the job done.

Cheers,

Brad

Disclaimer: The author is an employee of Dell, Inc. However, the views and opinions expressed by the author do not necessarily represent those of Dell, Inc. The author is not an official media spokesperson for Dell, Inc.