On data center scale, OpenFlow, and SDN

Lately I’ve been thinking about the potential applicability of OpenFlow to massively scalable data centers. A common building block of a massive cloud data center is a cluster, a grouping of racks and servers with a common profile of inter-rack bandwidth and latency characteristics. One of the primary challenges in building networks for a massive cluster of servers (600-1000 racks) is the scalability of the network switch control plane.

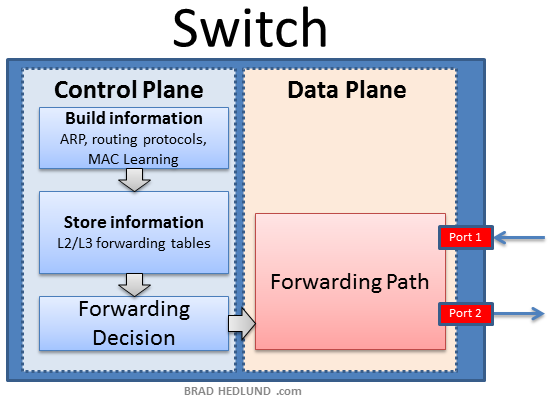

Simplistically speaking, the basic job of a network switch is to make a forwarding decision (control plane) and subsequently forward the data toward a destination (data plane). In the networking context, the phrase “control plane” might mean different things to different people. When I refer to “control plane” here I’m talking about the basic function of making a forwarding decision, the information required to facilitate that decision, and the processing required to build and maintain that information.

To make forwarding decisions very quickly, the network switch is equipped with very specialized memory resources (TCAM) to hold the forwarding information. The specialized nature of this memory makes it difficult and expensive for each switch to have large quantities of memory available for holding information about a large network.

Given that OpenFlow removes the control plane responsibilities from the switch, it also removes the associated scale problems and shifts the burden upstream to a more scalable, centralized OpenFlow controller. When controlled via OpenFlow, the switch itself no longer makes forwarding decisions. Therefore the switch no longer needs to maintain the information required to facilitate those decisions, and no longer needs to run the processes required to build and maintain that information. All of that responsibility is now outsourced to the central, overarching OpenFlow controller. With that in mind, my curiosity is piqued about the impact this may have on scalability, for better or worse.

Making a forwarding decision requires having information, and the nature of that information is shaped by the processes used to build it. In networks today, each individual network switch independently performs all three functions: building the information, storing the information, and making a decision. OpenFlow seeks to assume all three responsibilities. Sidebar question: Is it necessary to assume all three functions to achieve the goal of massive scale?

In networks today, if you build a cluster based on a pervasive Layer 2 design (any VLAN in any rack), every top of rack (ToR) switch builds forwarding information by a well-known process called source MAC learning. This process determines the nature of the forwarding information stored by each ToR switch, which ends up being a complete table of all station MAC addresses (virtual and physical) in the entire cluster. With a limit of 16-32K entries in the MAC table of the typical ToR, this presents a real scalability problem. But what really created this problem? The process used to build the information (source MAC learning)? The amount of information exposed to the process (pervasive L2 design)? Or the limited capacity to hold information?

The OpenFlow enabled ToR switch doesn’t have any of those problems. It doesn’t need to build forwarding information (source MAC learning) and it doesn’t need to store it. This sounds promising for scalability. But by taking such wholesale ownership of the switch control plane, does OpenFlow create a new scalability challenge in the process? Consider that a rack with 40-80 multi-core servers could be processing thousands of new flows per second. With the ToR sending the first packet of each new flow to the OpenFlow controller, how do you build a message bus to support that? How does that scale over many hundreds of racks? Assuming 5,000 new flows per second per rack across 1,000 racks, that’s 5,000,000 flow inspections per second to be delivered between the ToR switches and the OpenFlow controller(s). Additionally, if each server is running an OpenFlow controlled virtual switch, the first packet of the same 5,000,000 flows per second will also be perceived as new by the virtual switch and again sent to the OpenFlow controller(s). That’s 10,000,000 flow inspections per second, without yet factoring in the aggregation switch traffic.

In networks today, another cluster design option that provides better scalability is a mixed L2/L3 design where the Layer 2 ToR switch is only exposed to a unique VLAN for its rack, with the L3 aggregation switches straddling all racks and all VLANs. Here the same MAC learning process is used, only now the ToR is exposed to much less information: just one VLAN unique to the 40 or 80 servers in its rack. Therefore, the resulting information set is vastly reduced, easily fitting into typical ToR table sizes, which virtually eliminates the ToR switch as a scaling problem. OpenFlow controlling the ToR switch would not add much value here in terms of scalability. In fact, in this instance, OpenFlow may create a new scalability challenge that previously did not exist (10,000,000 flow inspections per second).
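To put rough numbers on the difference between the two designs, here is a back-of-the-envelope sketch. The rack count, servers per rack, and the 32K table limit come from the ranges above; the figure of 10 virtual machines per server is purely my assumption for illustration, as are the function and variable names.

```python
# Rough estimate of MAC entries a ToR must hold under each design.
# VMS_PER_SERVER is a hypothetical value, not a number from this post.
TOR_MAC_TABLE_LIMIT = 32_000   # upper end of the 16-32K range
RACKS = 1_000
SERVERS_PER_RACK = 40
VMS_PER_SERVER = 10            # assumption for illustration only


def macs_visible_to_tor(racks_visible, servers_per_rack, vms_per_server):
    """One MAC per physical server plus one per virtual machine."""
    return racks_visible * servers_per_rack * (1 + vms_per_server)


# Pervasive L2: every ToR learns every station in the cluster.
pervasive_l2 = macs_visible_to_tor(RACKS, SERVERS_PER_RACK, VMS_PER_SERVER)
# Mixed L2/L3: each ToR only learns the stations in its own rack.
mixed_l2_l3 = macs_visible_to_tor(1, SERVERS_PER_RACK, VMS_PER_SERVER)

for name, count in [("pervasive L2", pervasive_l2), ("mixed L2/L3", mixed_l2_l3)]:
    verdict = "fits" if count <= TOR_MAC_TABLE_LIMIT else "overflows"
    print(f"{name}: {count:,} MACs, {verdict} a {TOR_MAC_TABLE_LIMIT:,}-entry table")
```

Under these assumed numbers the pervasive L2 design needs more than ten times what the ToR table can hold, while the per-rack VLAN design uses only a small fraction of it.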

In either the pervasive L2 or mixed L2/L3 designs discussed above, the poor aggregation switches (straddling all VLANs, for all racks in the cluster) are faced with the burden of building and maintaining both Layer 2 and Layer 3 forwarding information for all hosts in the cluster (perhaps 50-100K physical machines, and many more virtual). While the typical aggregation switch (Nexus 7000) may have more forwarding information scalability than a typical ToR, these table sizes too are finite, creating scalability limits to the overall cluster size. Furthermore, the processing required to build and maintain the vast amounts of L2 & L3 forwarding information (e.g. ARP) can pose challenges to stability.

Here again, the OpenFlow controlled aggregation switch would outsource all three control plane responsibilities to the OpenFlow controller(s): building information, maintaining information, and making forwarding decisions. Given the aggregation switch is now relieved of the responsibility of building and maintaining forwarding information, this opens the door to higher scalability, better stability, and larger cluster sizes. However, again, a larger cluster size means more flows per second. Because the aggregation switch doesn’t have the information to make a decision, it must send the first packet of each new flow to the OpenFlow controller(s) and wait for a decision. The 5,000,000 new flows per second are now inspected again at the aggregation switch, for a potential total of 15,000,000 inspections per second for 5,000,000 flows through the Agg, ToR, and vSwitch layers. How do you build a message bus to support such load? Should the message bus be in-band? Out-of-band? If out-of-band, how does that affect cabling, and does it mean a separate switch infrastructure for the message bus?
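The running totals in this post are simple multiplication, and a small sketch makes it easy to plug in your own numbers. It assumes, as I have throughout, that every OpenFlow controlled layer sends the first packet of every new flow to the controller; the function name is my own.

```python
# Controller inspections per second if each OpenFlow layer punts the
# first packet of every new flow. Figures are the ones used in this post.
FLOWS_PER_RACK_PER_SEC = 5_000
RACKS = 1_000


def inspections_per_second(flows_per_rack, racks, layers):
    """Each layer that sees a new flow generates one controller inspection."""
    return flows_per_rack * racks * layers


for layers, label in [(1, "ToR"), (2, "ToR + vSwitch"), (3, "Agg + ToR + vSwitch")]:
    total = inspections_per_second(FLOWS_PER_RACK_PER_SEC, RACKS, layers)
    print(f"{label}: {total:,} inspections/sec")
```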

One answer to reducing the flow inspection load would be reducing the granularity of what constitutes a new flow. Rather than viewing each new TCP session as a new flow, perhaps anything destined to a specific server IP address is considered a flow. This would dramatically reduce our 5,000,000 new flow inspections per second number. However, by reducing the flow granularity in OpenFlow we also equally compromise forwarding control of each individual TCP session inside of the main “flow”. This might be a step forward for scalability, but could be a step backward in traffic engineering. Switches today are capable of granular load balancing of individual TCP sessions across equal cost paths.
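Here is a toy illustration of that trade-off. It is not OpenFlow code; it just counts how many "first packets" a switch would send to a controller under two hypothetical definitions of a flow: the full TCP session versus the destination IP only. The packet fields, addresses, and function names are all made up for the example.

```python
from collections import namedtuple

# Hypothetical packet header fields used as the flow match.
Packet = namedtuple("Packet", "src_ip dst_ip proto src_port dst_port")


def per_session_key(pkt):
    """Fine-grained: every distinct TCP session is its own flow."""
    return tuple(pkt)


def per_destination_key(pkt):
    """Coarse: anything destined to the same server IP is one flow."""
    return pkt.dst_ip


def controller_inspections(packets, key_fn):
    """Count packets whose flow key has not been seen before."""
    seen = set()
    misses = 0
    for pkt in packets:
        key = key_fn(pkt)
        if key not in seen:
            seen.add(key)
            misses += 1
    return misses


# 100 TCP sessions from four clients to a single web server.
packets = [
    Packet(f"10.0.0.{i % 4 + 1}", "10.0.1.10", "tcp", 40000 + i, 80)
    for i in range(100)
]

fine = controller_inspections(packets, per_session_key)
coarse = controller_inspections(packets, per_destination_key)
print(f"per-session flows: {fine}, per-destination flows: {coarse}")
```

With the coarse key the controller is consulted once instead of 100 times, but it also makes a single decision that applies to all 100 sessions, which is exactly the loss of per-session control described above.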

Finally, in networks today, there is the end-to-end L3 cluster design where the Agg, ToR, and vswitch are all Layer 3 switches. Given that the aggregation switch is no longer Layer 2 adjacent to all hosts within the cluster, the amount of processing required to maintain Layer 2 forwarding information is greatly reduced. For example, it is no longer necessary for the Agg switch to store a large MAC table, process ARP requests, and subsequently map destination MACs to /32 IP route table entries for all hosts within the cluster (a process called ‘ARP glean’). This certainly helps the stability and overall scalability of the cluster.

However, the end-to-end L3 cluster design introduces new scalability challenges. Rather than processing ARP requests, the Agg switch must now maintain L3 routing protocol sessions with each L3 ToR (hundreds). Assuming OSPF or IS-IS as the IGP, each ToR and vswitch must process LSA (link state advertisement) messages from every other switch in its area. And each Agg switch (straddling all areas) must process all LSA messages from all switches in all areas of the cluster. Furthermore, now that the Agg switch is no longer L2 adjacent to all hosts, the Load Balancers may not be L2 adjacent to all hosts either. How does that impact the Load Balancer configuration? Will you need to configure L3 source NAT for all flows? The Load Balancer may not support L3 direct server return, etc.

The problem here is not so much the table sizes holding the information (IP route table) as it is the processes required to build and maintain that information (IP routing protocols). Here again, OpenFlow would take a wholesale approach of completely removing all of the table size and L3 processing requirements. With OpenFlow, the challenges of Layer 2 vs. Layer 3 switching and the associated scalability trade-offs become irrelevant. That’s good for scalability. However, OpenFlow would also take responsibility for forwarding decisions, and in a huge cluster of millions of flows that could add new challenges to scalability (discussed earlier).

In considering the potential message bus and flow inspection scalability challenges of OpenFlow in a massive data center, one thought comes to mind: Is it really necessary to remove all control plane responsibilities from the switch to achieve the goal of scale? Rather than taking the wholesale all-or-nothing approach as OpenFlow does, what if only one function of the control plane were replaced, leaving the rest intact? Remember the three main functions we’ve discussed: building information, maintaining information, and making forwarding decisions. What if the function of building information were replaced by a controller, while the switch continued to hold the information provided to it and make forwarding decisions based on that information?

In such a model, the controller acts as a software defined network provisioning system, programming the switches via an open API with L2 and L3 forwarding information, and continually updating them upon changes. The controller knows the topology, L2 or L3, and knows the devices attached to the topology and their identities (IP/MAC addresses). The burdensome processes of ARP gleans or managing hundreds of routing protocol adjacencies and messages are offloaded to the controller, while the millions of new flows per second are forwarded immediately by each switch, without delay. Traditional routing protocols and spanning tree as we know them could go away, with the controller having a universal view of the topology, much like OpenFlow. The message bus between switch and controller still exists; however, the number of flows and flow granularity are of no concern. Only new and changed L2 or L3 forwarding information traverses the message bus (along with other management data perhaps). This too is a form of Software Defined Networking (SDN), in my humble opinion.
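To make the idea a little more concrete, here is a minimal sketch of that split of responsibilities. Everything in it is hypothetical: the `Switch` and `Controller` classes, their methods, and the port names stand in for whatever open API such a system might expose, and they do not model any real product or the OpenFlow protocol.

```python
class Switch:
    """Holds forwarding information and makes its own forwarding decisions."""

    def __init__(self, name):
        self.name = name
        self.table = {}  # destination address -> output port

    def program(self, dst, port):
        # Called by the controller when forwarding information changes.
        self.table[dst] = port

    def forward(self, dst):
        # Local lookup only; no round trip to the controller per flow.
        return self.table.get(dst)


class Controller:
    """Builds forwarding information and pushes only changes to switches."""

    def __init__(self):
        self.switches = {}
        self.bus_messages = 0  # messages sent over the controller/switch bus

    def attach(self, switch):
        self.switches[switch.name] = switch

    def host_update(self, dst, port_by_switch):
        # Program each switch only if its entry is new or has changed.
        for name, port in port_by_switch.items():
            switch = self.switches[name]
            if switch.table.get(dst) != port:
                switch.program(dst, port)
                self.bus_messages += 1


controller = Controller()
tor, agg = Switch("tor1"), Switch("agg1")
controller.attach(tor)
controller.attach(agg)

# A new host appears: two messages, one per switch that needs an entry.
controller.host_update("10.0.1.10", {"tor1": "eth5", "agg1": "eth1/1"})

# Thousands of new flows to that host are forwarded locally.
ports = [tor.forward("10.0.1.10") for _ in range(5000)]
print(controller.bus_messages, ports[0])
```

In this sketch the message bus load tracks how often hosts and topology change, not how many flows are started, which is the property I am after.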

Perhaps this is an avenue the ONF (Open Networking Foundation) will explore in their journey to build and define SDN interfaces?

Once a controller and message bus are introduced into the architecture for SDN, the foundation is there to take the next, larger leap into full OpenFlow, if it makes sense. A smaller subset of flows could be exposed to OpenFlow control for testing and experimentation, moving gradually towards full OpenFlow control for all traffic if desired. Indeed, we may find that granular OpenFlow processing and message bus scale are a non-issue in massive internet data centers.

At least for now, as you can see, I have a lot more questions than I have answers or hype. But one thing is for sure: things are changing fast, and it appears software defined networking, in one form or another, is the way forward.

Exciting times ahead.

Cheers, Brad