Inverse Virtualization for Internet Scale Applications

The word virtualization is one of those words that can mean a lot of things, sorta like cloud.

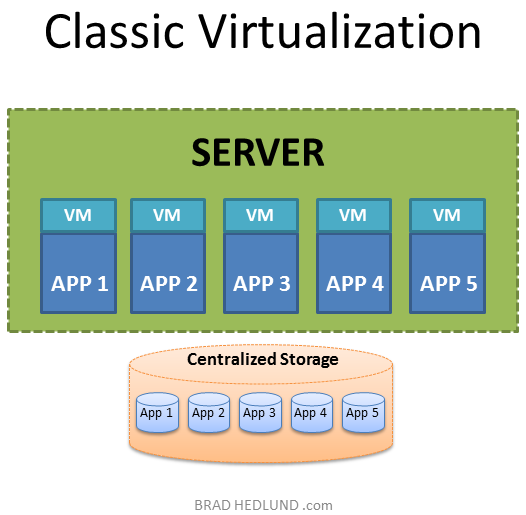

When people think of server virtualization today, they often think of it in terms of taking a physical server and having it host many virtual servers, each with its own operating system and application (One to Many): many applications running on one physical machine. This model is enabled by virtualization software (e.g. VMware, KVM, Hyper-V), and it takes the classic application development model of One Application to One Server and consolidates it onto a much more efficient infrastructure of fewer physical servers. One Server to Many Applications. As a nice side effect, the virtualization software also gave the classic application model the high availability and agility it often lacked before. I’ll refer to this as Classic Virtualization.

So server virtualization is a slam dunk, right? Who in their right mind would not do that? Well, to the shock and surprise of many, Facebook recently said they were not using “server virtualization”, and instead planned on buying more servers, not fewer <GASP!>. This made headlines in Computerworld today: “Facebook nixes virtualization.”

The shock and surprise, I believe, comes from the fact that Facebook, being an internet scale application provider, has an entirely different application development model from the typical IT organization, one that most people don’t understand. Facebook, like Yahoo, Google, Apple iTunes, Twitter, etc., provides just one or a few applications that must scale to global demand. This is very different from the typical IT data center supporting hundreds of applications for a much smaller corporate community. Rather than the classic IT application model of One Application to One Server (for each of those hundreds of apps), large internet providers like Facebook have a development model of One Application to Many Servers.

The fact is, Facebook has implemented server virtualization at the application level. Many Servers to One Application. This is another form of server virtualization common in the massively scalable internet data center. Each cheap little low-power server is just an insignificant worker node in the grand scheme of things. I’ll refer to this as Inverse Virtualization.

Another interesting observation here is the paradigm shift in storage away from the typical IT data center. In the classic IT virtualization model, operating system and application data from each of the hundreds of virtualized servers is encapsulated and stored on a big central pool of storage built on hardware from EMC, NetApp, Hitachi, etc. The internet scale data center is completely different. Remember, each insignificant server is a worker node providing a small slice of client processing for one big application. The same can be true for storage: each insignificant server also has a cheap desktop-class hard drive that contributes a small slice of storage to one massive logical pool of distributed storage. See, for example, OpenStack Swift, the Hadoop Distributed File System (HDFS), or the Google File System (GFS).
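To make the “slice of storage per cheap server” idea a bit more concrete, here is a minimal sketch, assuming a simple consistent-hashing scheme in which each object is stored on three distinct worker nodes. The `HashRing` class, its `nodes_for` method, and the node names are hypothetical illustrations; this is not how Swift, HDFS, or GFS actually place data (each has its own placement logic).

```python
import bisect
import hashlib


class HashRing:
    """Toy consistent-hash ring that maps object names to worker nodes.

    Hypothetical illustration only: each node's cheap local disk holds a
    slice of one logical storage pool. This is NOT the actual placement
    logic of OpenStack Swift, HDFS, or GFS.
    """

    def __init__(self, nodes, vnodes=100):
        # Each physical node gets `vnodes` points on the ring to even out load.
        points = []
        for node in nodes:
            for i in range(vnodes):
                points.append((self._hash(f"{node}#{i}"), node))
        points.sort()
        self._hashes = [h for h, _ in points]
        self._owners = [n for _, n in points]
        self._node_count = len(set(nodes))

    @staticmethod
    def _hash(key):
        # Stable 64-bit integer from MD5; fine for placement, not for security.
        return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")

    def nodes_for(self, obj_name, replicas=3):
        """Return `replicas` distinct nodes, walking clockwise from the object's hash."""
        if replicas > self._node_count:
            raise ValueError("more replicas requested than nodes available")
        i = bisect.bisect(self._hashes, self._hash(obj_name))
        chosen = []
        while len(chosen) < replicas:
            node = self._owners[i % len(self._owners)]
            if node not in chosen:
                chosen.append(node)
            i += 1
        return chosen


# Hypothetical farm of 100 cheap servers, each contributing its local disk.
ring = HashRing([f"node{i:03d}" for i in range(100)])
print(ring.nodes_for("photos/12345.jpg"))  # three distinct node names
```

Because every node owns many small arcs of the ring, adding or removing a server only remaps roughly that server’s share of objects, and losing one cheap disk costs just one of the three replicas of the objects it held.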

Why cheap, low-power servers for “Inverse Virtualization”? As Facebook points out, a bigger server doesn’t necessarily provide a faster experience for the consumer. A bigger server can handle more concurrent consumers on one physical machine; however, should that server (or the rack it’s located in) fail, a much larger swath of users is affected. Keeping service levels consistent despite all types of failures, which occur on a daily or even hourly basis in data centers this large, is very important to the application provider. Moreover, a bigger server doesn’t deliver a better bang for the buck once you add up the extra equipment and power. This conclusion is not unique to Facebook; other internet application providers, such as Microsoft, are reaching it too.
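The “larger swath of affected users” argument is simple arithmetic. Here is a rough sketch, assuming users are spread evenly across identical servers; the farm sizes, rack densities, and user count below are made-up numbers for illustration, not Facebook’s.

```python
def blast_radius(total_users, num_servers, servers_per_rack):
    """Users affected when one server, or one whole rack, fails.

    Simplifying assumption: users are spread evenly across identical servers.
    """
    per_server = total_users / num_servers
    racked = min(servers_per_rack, num_servers)
    return {
        "server_users": per_server,
        "server_fraction": 1 / num_servers,
        "rack_users": per_server * racked,
        "rack_fraction": racked / num_servers,
    }


# Hypothetical: the same 10 million concurrent users served by either
# 1,000 big servers (20 per rack) or 4,000 small servers (40 per rack).
big_farm = blast_radius(10_000_000, 1_000, 20)
small_farm = blast_radius(10_000_000, 4_000, 40)
print(big_farm["server_users"], small_farm["server_users"])  # 10000.0 2500.0
print(big_farm["rack_users"], small_farm["rack_users"])      # 200000.0 100000.0
```

Under these assumptions, losing one small server touches a quarter as many users as losing one big server. How a rack failure compares depends on how densely each kind of server is packed, which is why the rack density is an explicit input here.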

Microsoft presented the following data at the Linley Data Center conference earlier this year, which shows that lower-power Intel processors provide better performance when system cost and power requirements are taken into account:

The natural conclusion is that, with Inverse Virtualization, it makes sense to build large scale-out farms of insignificant low-power servers to achieve better overall performance at lower power and lower cost. Furthermore, less risk rides on any single server, so the application can better absorb failures at the server or rack level while keeping the overall user experience as consistent as possible.
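One way to frame “performance when considering system cost and power” is throughput per dollar of total cost of ownership. The sketch below assumes a simple model (purchase price plus electricity over a fixed service life, scaled by a PUE factor for cooling overhead). The function name, the defaults, and both server profiles are invented for illustration and are NOT Microsoft’s data.

```python
HOURS_PER_YEAR = 24 * 365


def perf_per_tco_dollar(throughput, server_cost, watts,
                        years=3, usd_per_kwh=0.10, pue=1.5):
    """Throughput per dollar of (purchase price + electricity) over the server's life.

    All defaults are hypothetical: a 3-year service life, $0.10/kWh, and a
    PUE of 1.5 to account for cooling and power-distribution overhead.
    """
    energy_kwh = watts / 1000 * HOURS_PER_YEAR * years * pue
    total_cost = server_cost + energy_kwh * usd_per_kwh
    return throughput / total_cost


# Invented example numbers (NOT Microsoft's data): requests/s, price in USD, watts.
big = perf_per_tco_dollar(throughput=1000, server_cost=10_000, watts=400)
small = perf_per_tco_dollar(throughput=300, server_cost=2_000, watts=100)
print(f"big: {big:.4f} req/s per $, small: {small:.4f} req/s per $")
```

With these made-up inputs the small server does less work per box but more work per dollar spent on hardware and power, which is the shape of the argument; real numbers would of course depend on the workload and the actual hardware.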

So did Facebook really “nix” server virtualization? I beg to differ. Facebook, like many other internet scale application and cloud service providers, has been implementing virtualization from the get-go: Inverse Virtualization. It is a virtualization model that arguably results in a more efficient scale-out architecture of low-power servers, compared to traditional IT server virtualization, if you consider the bigger picture of Performance vs. Cost vs. Power.

Cheers,

Brad