Cisco UCS Fabric Failover: Slam Dunk? or So What?

Fabric Failover is a unique capability found only in Cisco UCS that allows a server adapter to have a highly available connection to two redundant network switches without any NIC teaming drivers or any NIC failover configuration required in the OS, hypervisor, or virtual machine. In this article we will take a brief look at some common use cases for Fabric Failover and the UCS Manager software versions that support each implementation.

With Fabric Failover, the intelligent network provides the server adapter with a virtual cable that (because it’s virtual) can be quickly and automatically moved from one upstream switch to another. Furthermore, the upstream switch that this virtual cable connects to doesn’t have to be the first switch inside a blade chassis; rather, it can be extended through a “fabric extender” and connected to a common system-wide upstream switch (in UCS this would be the fabric interconnect), as shown in the diagram below.

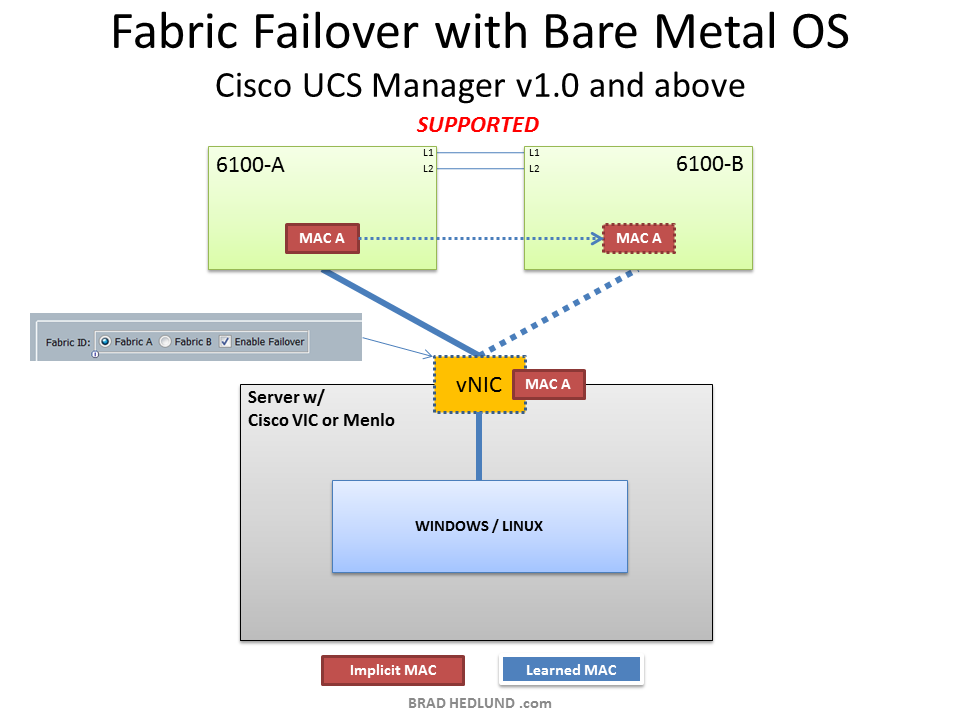

When the intelligent network moves a virtual cable from one physical switch to another, the virtual cable is moved to an identically configured virtual Ethernet port with the exact same numerical interface identifier. We should also consider other state information that needs to move along with the virtual cable, such as the adapter’s network MAC address. In Cisco UCS, the adapter’s network MAC address is implicitly known (meaning it does not need to be learned) because the adapter MAC address and the network port it connects to were both provisioned by UCS Manager. When Fabric Failover is enabled, the implicit MAC address of the adapter is synchronized with the second fabric switch in preparation for a failure. This capability has been present since UCS Manager version 1.0. Below is an example of a Windows or Linux OS loaded on the bare metal server with Fabric Failover enabled. The OS has a simple redundant connection to the network with a single adapter and no requirement for a NIC teaming configuration.
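To make the synchronization described above a little more concrete, here is a minimal Python sketch of the idea. It is a toy model only, not UCS Manager code: the `Fabric` and `VirtualCable` classes, the vEth ID `1001`, and the MAC address are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Fabric:
    """Toy fabric interconnect holding virtual Ethernet ports keyed by ID."""
    name: str
    up: bool = True
    veth_ports: dict = field(default_factory=dict)  # veth_id -> MAC


@dataclass
class VirtualCable:
    """Toy virtual cable: one adapter link, active on one fabric at a time."""
    veth_id: int
    mac: str
    active: Fabric
    standby: Fabric

    def provision(self):
        # The manager assigned both the MAC and the port, so nothing has to
        # be learned: the same vEth ID and MAC are programmed on both fabrics.
        for fabric in (self.active, self.standby):
            fabric.veth_ports[self.veth_id] = self.mac

    def fail_over(self):
        # Move the cable only when the active fabric is down and the
        # standby fabric is still up.
        if not self.active.up and self.standby.up:
            self.active, self.standby = self.standby, self.active
        return self.active


fabric_a = Fabric("A")
fabric_b = Fabric("B")
cable = VirtualCable(veth_id=1001, mac="00:25:b5:00:00:0a",
                     active=fabric_a, standby=fabric_b)
cable.provision()

fabric_a.up = False              # simulate a failure of fabric A
now_active = cable.fail_over()
print(now_active.name, now_active.veth_ports[cable.veth_id])
```

The point of the sketch is that the OS never appears in it: the port identifier and MAC already exist on the second fabric before the failure, so the move is invisible to the server.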

In addition to synchronizing the implicit MAC, there may be other information we need to migrate, depending on our implementation. For example, if our server is running a hypervisor, it may be hosting many virtual machines behind a software switch, with each VM having its own MAC address and each VM using the same server adapter and virtual cable for connectivity outside of the server. When the virtual machines are connected to a software-based hypervisor switch, the MAC addresses of the individual virtual machines are not implicitly known, because the VM MAC was provisioned by vCenter, not UCS Manager. As a result, each VM MAC will need to be learned by the virtual Ethernet port on the upstream switch (the UCS Fabric Interconnect). If a fabric failure should occur, we would want these learned MAC addresses to be ready on the second fabric as well. This capability of synchronizing learned MAC addresses between fabrics is not currently available in UCS Manager version 1.3; it is planned for UCSM version 1.4.

The diagram below shows the use of Fabric Failover in conjunction with a hypervisor switch, running UCS Manager v1.3 or below. As you can see, the implicit MAC of the NIC used by the hypervisor is in sync, but the more important learned MACs of the virtual machines and Service Console are not. Hence, in a failed fabric scenario the 6100-B would need to re-learn these MAC addresses, which could take an unpredictable amount of time, depending on how long it takes for these endpoints to send another packet (preferably a packet that travels to the upstream L3 switch for full bi-directional convergence). Therefore, the design combination shown below is not supported in UCSM version 1.3 or below.

With the release of UCS Manager v1.4, support will be added for synchronizing not only the implicit MACs, but also any learned MACs. This will enable the flexibility to use Fabric Failover in any virtualization deployment scenario, such as when a hypervisor switch is present. Should a fabric failure occur, all affected MACs will already be available on the second fabric, and the failure event will trigger the second UCS Fabric Interconnect to send Gratuitous ARPs upstream to help the upstream network quickly re-learn the new location of the affected MACs. The Fabric Failover implementation with a hypervisor switch and UCSM v1.4 is shown in the diagram below.
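The difference between the two behaviors (implicit MACs only versus implicit plus learned MACs) can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not how the Fabric Interconnect is actually implemented: the class, the function names, the `sync_learned` flag, and the MAC values are all hypothetical, and the "announcement" is just the list of MACs the second fabric already knows at the moment of failure.

```python
class FabricInterconnect:
    """Toy fabric interconnect with separate implicit and learned MAC tables."""

    def __init__(self, name):
        self.name = name
        self.implicit = {}  # veth_id -> MAC provisioned by the manager
        self.learned = {}   # veth_id -> set of MACs seen in traffic


def provision(fabrics, veth_id, mac):
    # Implicit MACs are programmed on every fabric at provisioning time.
    for fabric in fabrics:
        fabric.implicit[veth_id] = mac
        fabric.learned.setdefault(veth_id, set())


def learn(active, standby, veth_id, mac, sync_learned):
    # The active fabric learns a VM MAC from traffic; the standby only
    # gets a copy when learned-MAC synchronization is turned on.
    active.learned[veth_id].add(mac)
    if sync_learned:
        standby.learned[veth_id].add(mac)


def macs_ready_on_failover(standby, veth_id):
    # MACs the standby could announce upstream immediately (for example via
    # gratuitous ARP) without waiting for the endpoints to send traffic.
    return sorted({standby.implicit[veth_id]} | standby.learned[veth_id])


def simulate(sync_learned):
    a, b = FabricInterconnect("A"), FabricInterconnect("B")
    provision((a, b), 10, "00:25:b5:00:00:01")           # hypervisor NIC
    for vm_mac in ("00:50:56:aa:00:01", "00:50:56:aa:00:02"):
        learn(a, b, 10, vm_mac, sync_learned)             # VMs behind vSwitch
    return macs_ready_on_failover(b, 10)


print(simulate(sync_learned=False))  # only the implicit MAC is ready
print(simulate(sync_learned=True))   # implicit and learned MACs are ready
```

With `sync_learned=False` the second fabric knows only the hypervisor NIC, so the VM MACs would have to be re-learned after the failure; with `sync_learned=True` all three addresses are ready to be announced.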

Note: With the hypervisor switch examples shown above, it would certainly be possible to provide a redundant connection to the hypervisor management console with a single adapter. However, some hypervisors, most notably VMware ESX, will complain and bombard you with warnings that you only have a single adapter provisioned to the management network and therefore no redundancy. This is perfectly understandable because the hypervisor has no awareness of the Fabric Failover services provisioned to it. After all, that is the goal of Fabric Failover: to be transparent. Furthermore, if you wish to use hypervisor switch NIC load sharing mechanisms such as VMware’s IP Hash or Load Based Teaming, or Nexus 1000V’s MAC Pinning or vPC Host-Mode, these technologies fundamentally require multiple adapters provisioned to the Port Group or Port Profile to operate, and they inherently provide redundancy themselves. In a nutshell, Fabric Failover with a hypervisor switch is certainly an intriguing design choice, but it may not be a slam dunk in every situation.

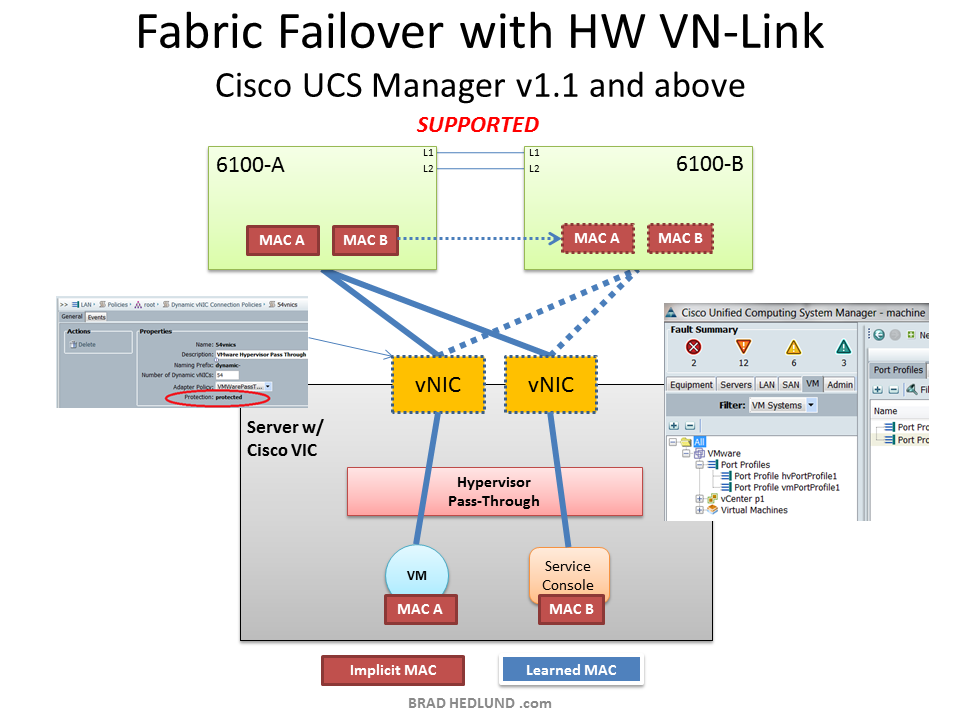

There is one very intriguing virtualization-based design where Fabric Failover IS a slam dunk every time: the Hypervisor Pass-Through and Hypervisor Bypass designs available in Cisco UCS (commonly known as HW VN-Link). When Cisco UCS is configured for HW VN-Link, it presents itself to VMware vCenter as a distributed virtual switch (DVS), much like the Nexus 1000V does. Furthermore, Cisco UCS will dynamically provision virtual adapters in the Cisco VIC for each VM as it is powered on or moved to a host server. These virtual adapters are basically a hardware implementation of a DVS port. One method of getting the VM connected to its hardware DVS port is by passing its packets through the hypervisor with a software implementation of a pass-through module (rather than a switch). The software pass-through module requires fewer CPU cycles than a software switch, yet the hypervisor still has visibility into network I/O and therefore vMotion works as expected. This unparalleled integration with VMware has been in UCS Manager since version 1.1.

So where does Fabric Failover have a slam dunk role? Simply put, Fabric Failover provides a redundant network connection to the VM without the need to provision a second NIC in the VM settings. Most VMs are provisioned with one NIC simply because the hypervisor switch provided the redundancy. With the hypervisor switch gone, Fabric Failover provides the redundancy to the VM without requiring any changes to the VM configuration. This makes it very easy to consider UCS HW VN-Link as a design choice, because minimal changes afford an easy migration.

In a design with hypervisor pass-through (HW VN-Link), every MAC address is implicitly known, including those of the virtual machines. This is the result of the programmatic API connection between Cisco UCS and vCenter. Every time a new VM is provisioned in vCenter and powered on in the DVS, Cisco UCS knows about it, including the MAC address provisioned to the VM. Therefore, the MAC addresses are implicitly known through a programming interface and assigned to a unique virtual Ethernet port for every VM. Because UCS Fabric Failover has worked with implicit MACs since version 1.0, none of the caveats discussed above for the hypervisor switch designs apply here.
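As a rough illustration of why nothing needs to be learned in this design, the following Python sketch models a management-plane notification that creates one virtual Ethernet port per VM on both fabrics. Everything here is an assumption made for illustration: `PassThroughSwitch`, `on_vm_power_on`, the starting vEth ID, and the VM names and MACs are hypothetical and do not correspond to any real UCS Manager or vCenter API.

```python
import itertools


class PassThroughSwitch:
    """Toy stand-in for UCS acting as a DVS; not a real UCS or vCenter API."""

    def __init__(self, first_veth_id=2000):
        self._ids = itertools.count(first_veth_id)
        self.fabric_a = {}  # veth_id -> VM MAC
        self.fabric_b = {}  # veth_id -> VM MAC
        self.vm_port = {}   # vm_name -> veth_id

    def on_vm_power_on(self, vm_name, mac):
        # The management plane reports the VM's MAC, so it is known before
        # the VM sends any traffic. Each VM gets its own vEth port, and the
        # same port ID and MAC are programmed on both fabrics.
        veth_id = next(self._ids)
        self.vm_port[vm_name] = veth_id
        self.fabric_a[veth_id] = mac
        self.fabric_b[veth_id] = mac
        return veth_id


dvs = PassThroughSwitch()
web = dvs.on_vm_power_on("web01", "00:50:56:aa:00:01")
db = dvs.on_vm_power_on("db01", "00:50:56:aa:00:02")
print(web, db, dvs.fabric_a == dvs.fabric_b)
```

Because both fabric tables are filled from the same notification, they are identical by construction, which is the property that lets the version 1.0 implicit-MAC failover cover every VM.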

The diagram below depicts Fabric Failover in conjunction with hypervisor pass-through.

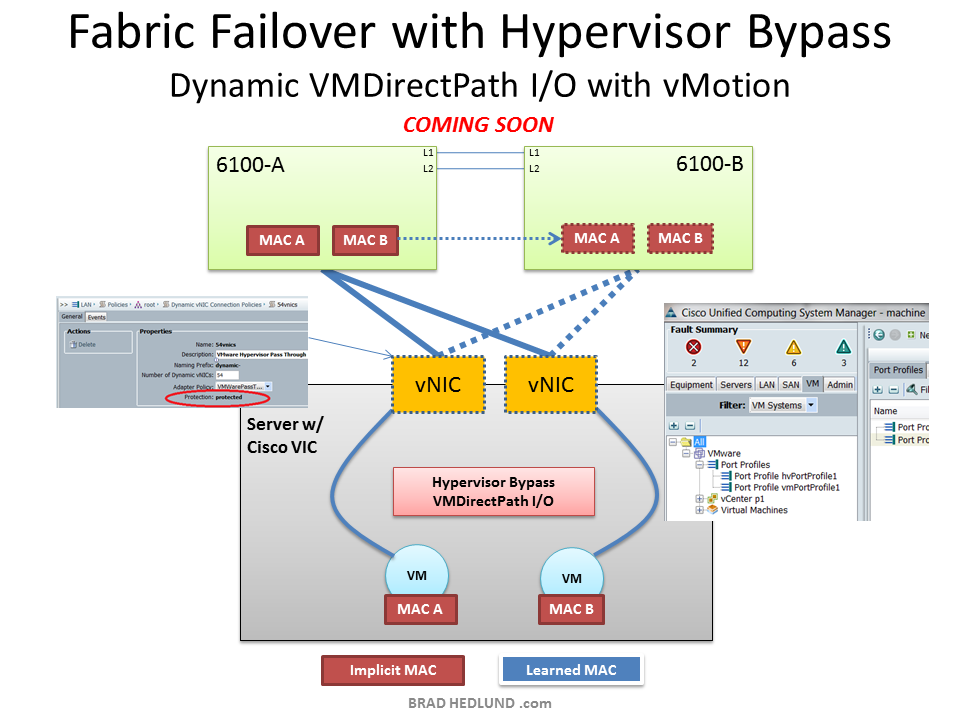

Below is a variation of HW VN-Link that uses a complete hypervisor bypass approach, rather than the pass-through approach shown above. This approach requires no CPU cycles to get the VM’s I/O to the network because the VM’s network driver writes directly to the physical adapter. Given that network I/O completely bypasses all hypervisor layers, there is an immediate benefit: higher throughput, lower latency, and fewer (if any) CPU cycles required for networking. The challenge with this approach is getting vMotion to work. Ed Bugnion (CTO of Cisco’s SAVBU) presented a solution to this challenge at VMworld 2010, in which he showed temporarily moving the VM to pass-through mode for the duration of the vMotion, then back to bypass at completion. But I digress.

Again, Fabric Failover has a slam dunk role to play here as well. Rather than retooling every VM with a second NIC (just for the sake of redundancy), Fabric Failover will take care of it :-)

Fabric Failover is so unique to Cisco UCS that for HP, IBM, or Dell to implement the same capability, they would need a fundamental overhaul of their architecture into a more integrated network + compute system like that of UCS.

Without Fabric Failover, how are the other compute vendors going to offer a hypervisor pass-through or bypass design choice without asking you to retool all of your VMs with a second NIC?

Even if you did add a second NIC to every VM, how are you going to ensure consistency? How will you know for sure that each VM has a second NIC for redundancy, and that the second NIC is properly associated with a redundant path?

How long will it be before the others (HP, IBM, Dell) have an adapter that can provision enough virtual adapters to assign one to each VM?

When that adapter is finally available, will the virtual adapters be dynamically provisioned via VMware vCenter? Or will you need to statically configure each adapter? How will vMotion work with statically configured adapters?

Of course none of this is a concern with Cisco UCS, because all of this technology is already there, built in, ready to use. :-) Whether or not you use hypervisor bypass in your design is certainly your choice – but at least with Cisco UCS you had that choice to make!

What do you think? Is Fabric Failover a “Slam Dunk”? Or a “So What”?