VMware 10GE QoS Design Deep Dive with Cisco UCS, Nexus

Last month I wrote a brief article discussing the intelligent QoS capabilities of Cisco UCS in a VMware 10GE scenario, accompanied by some flash animations for visual learners like me. In that article I (intentionally) didn’t get too specific with design details or technical nuance. Rather, I wanted to set the stage first with a basic presentation of what Quality of Service (QoS) is, how it’s applicable to data center virtualization, and how QoS compares to other less intelligent approaches to bandwidth management.

In this article we build upon the previous one, dive a little deeper, and discuss some designs that could be implemented with Cisco UCS or Nexus to provide intelligent QoS in VMware deployments with 10GE networking. We will look at many different scenarios, including designs with Cisco UCS using fancy adapters (Cisco VIC), designs with Cisco UCS using more standard adapters (e.g. Emulex or QLogic), and finally designs without Cisco UCS, where you may have non-Cisco servers connected to a Cisco Nexus switch infrastructure. The commonality and key enabler in all of the designs discussed here is the intelligent Cisco network.

With the release of vSphere 4.1, one of the major improvements VMware is proud to discuss is network I/O performance. For example, vMotion has been improved to allow 8 concurrent vMotion flows per host, up from 2. Furthermore, a single vMotion flow can reach 8Gbps. Add another vMotion flow and you can quickly saturate a 10GE adapter. See this post by Aaron Delp from ePlus, who wrote about the potential for network I/O saturation and the need for intelligent QoS, based on the testing of his colleague Don Mann. Keep an eye on Aaron’s blog for more content on this subject.

Saturating a 10GE adapter with network bandwidth is not the problem. In fact, that’s a good thing. This means you are making the most of the infrastructure resources and driving higher levels of efficiency. Similar to how higher server CPU utilization is generally considered a good thing, the same goes for network resources. The problem that can arise with saturating a 10GE network adapter is the performance impact on the many different applications using the server & adapter when there is no policy based enforcement of fair bandwidth sharing.

If you just “let it rip” with no governance at all, the biggest bully is going to take as much bandwidth as it can from the little guy. vMotion traffic is a great example of one of those bandwidth bullies. It’s a brute-force transfer of lots and lots of large packets from one host to another, arriving at a fast and frequent rate. The less frequent, smaller transactional traffic from the web server taking orders for your online business can easily get stomped and kicked to the bandwidth curb by the big vMotion bullies … now I think we can agree that’s NOT a good thing.

VMware showed that they understand this challenge by introducing a capability in vSphere 4.1 called Network I/O Control (NetIOC), which provides a means for the ESX hypervisor to enforce a fair sharing of bandwidth for network traffic leaving the hypervisor. (Notice I did not say: “leaving the server” or “leaving the adapter”) NetIOC uses the familiar “Shares” concept for scheduling bandwidth similar to how VMware has always scheduled sharing of CPU and Memory resources. The concept of bandwidth “Shares” is also very similar to how intelligent QoS operates in a Cisco network. My esteemed Cisco colleague M. Sean McGee recently wrote about these similarities in an article titled: Great Minds Think Alike - Cisco and VMware Agree on Sharing vs. Limiting

You might be thinking: “Great, problem solved! I’ll use NetIOC and I’m done.” Well, not so fast. (More on that later). A comprehensive solution to bandwidth management is one that is able to coordinate “Shares” of network bandwidth at both the server adapter AND network switch infrastructure, not just one or the other.

Let’s go ahead and take a look at some specific examples of 10GE VMware designs that provide the aforementioned coordinated server & network intelligent QoS (bandwidth “Shares”). We will start with some examples of Cisco UCS using the Cisco VIC (virtual interface card) as the server adapter, beginning with the brief overview of the Cisco VIC depicted below.

The Cisco VIC, also known as “Palo”

One of the areas that sets the Cisco VIC apart from other adapters is its powerful interface virtualization capabilities. With a single physical Cisco VIC adapter you can effectively fool the server into believing it has many adapters installed (currently as many as 58), all presented from one physical (dual port) 10GE adapter. You will see how we can use this powerful capability as a flexible design tool for intelligent QoS. In the diagram above we have provisioned (10) virtual adapters: (2) FC HBAs and (8) Ethernet NICs. Each virtual Ethernet NIC appears to the VMware host as a “vmnic”, and from there we can map different types of traffic to different virtual adapters.

Another area that sets the Cisco VIC apart from other adapters is in its powerful QoS capabilities. The Cisco VIC can provide a fair sharing of bandwidth for all of its virtual adapters, and the policy engine that defines how bandwidth is shared on the Cisco VIC is conveniently centrally defined by the overarching system-wide UCS Manager. Below is a diagram that shows how the Cisco VIC schedules bandwidth for its virtual adapters.

The Cisco VIC “Palo” QoS capabilities

In the example above we again have the (8) virtual Ethernet adapters and (2) virtual Fibre Channel adapters that the Cisco VIC presents to the VMware host as “real” adapters. The Cisco VIC itself has (8) traffic queues, each based on a COS value (the purple boxes numbered 0 thru 7). COS stands for “Class of Service”; when used, it is typically marked on the Ethernet packets as they traverse the network so they receive special treatment at each hop. In this example we have associated each virtual adapter with a specific COS value (centrally configured in UCS Manager). Therefore, any traffic a virtual adapter receives from the server is assigned to and marked with that COS value and placed into the associated queue for transmission scheduling (the scheduling only comes into play if there is congestion; otherwise the packet is marked and sent immediately). Another policy centrally defined in UCS Manager is the minimum percentage of bandwidth that each COS queue is always entitled to during congestion. For example, vNIC4 and vNIC8 are assigned to COS 5, for which the Cisco VIC will always provide 40% of the 10GE link during periods of congestion. Similarly, each vHBA has a 40% bandwidth guarantee, vNIC1 and vNIC2 share a 10% guarantee, and vNIC3 has its own 10%.
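The scheduling rule above (a guaranteed minimum during congestion, any idle bandwidth free for the taking otherwise) can be modeled as a weighted, work-conserving “water-filling” allocation. The Python sketch below is an idealized fluid model assumed purely for illustration; it is not how the Cisco VIC hardware scheduler is implemented, and the function name and class labels are hypothetical. The weights are the percentages from the Cisco VIC example above.

```python
def allocate(capacity_gbps, weights, demands):
    """Weighted, work-conserving bandwidth sharing (idealized water-filling).

    weights: class name -> minimum share in percent of the link
    demands: class name -> offered load in Gbps
    Returns class name -> Gbps granted.
    """
    alloc = {name: 0.0 for name in weights}
    active = {name for name in weights if demands.get(name, 0.0) > 0}
    remaining = capacity_gbps
    while active:
        total_weight = sum(weights[n] for n in active)
        fair = {n: remaining * weights[n] / total_weight for n in active}
        # Classes asking for less than their weighted share are fully served;
        # whatever they leave unused is redistributed in the next round.
        satisfied = {n for n in active if demands[n] <= fair[n]}
        if not satisfied:
            # Every remaining class is congested: each gets its weighted share.
            for n in active:
                alloc[n] = fair[n]
            break
        for n in satisfied:
            alloc[n] = demands[n]
            remaining -= demands[n]
        active -= satisfied
    return alloc


# Minimum-bandwidth percentages from the Cisco VIC example
VIC_WEIGHTS = {"vNIC4+vNIC8": 40, "vHBAs": 40, "vNIC1+vNIC2": 10, "vNIC3": 10}

# Everyone wants the whole link: each class is held to its guaranteed minimum.
print(allocate(10.0, VIC_WEIGHTS, {n: 10.0 for n in VIC_WEIGHTS}))
# {'vNIC4+vNIC8': 4.0, 'vHBAs': 4.0, 'vNIC1+vNIC2': 1.0, 'vNIC3': 1.0}

# Only vNIC3 is busy: with no congestion it may use the entire 10GE link.
print(allocate(10.0, VIC_WEIGHTS, {"vNIC3": 10.0})["vNIC3"])  # 10.0
```

The loop is the design choice that makes the minimums “minimums” rather than caps: bandwidth a quiet class does not use is handed to the busy classes in proportion to their weights.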

With intelligent QoS, the minimum bandwidths add up to 100%. This is a big difference from other, less intelligent approaches that use “Rate-Limiting” (sometimes also called “Shaping”), where the maximum bandwidths must not add up to more than 100% (example: HP Flex-10 / FlexFabric). Sorry, couldn’t resist :-)

If two or more virtual adapters are assigned to the same COS value (such as vNIC1 and vNIC2), the Cisco VIC will provide Round Robin placement of packets from each virtual adapter into the COS queue, to be serviced by the Bandwidth Scheduler. You can also define maximum transmit bandwidth limits for any virtual adapter, such as vNIC1 being limited to 1GE transmit. If you decide to do that, the Cisco VIC will enforce the rate limit prior to any necessary Round Robin servicing.
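The two steps just described, per-vNIC rate limiting first and then Round Robin placement into a shared COS queue, can be sketched in Python as follows. Modeling the rate limit as a byte budget per scheduling interval is an assumption of this sketch (the adapter does this in hardware), and the function names and budget values are hypothetical.

```python
from itertools import chain, zip_longest

_EMPTY = object()  # filler for vNICs that run out of packets first


def rate_limit(packet_sizes, budget_bytes):
    """Admit packets in order until this interval's byte budget is spent."""
    if budget_bytes is None:  # no max transmit rate configured on this vNIC
        return list(packet_sizes)
    admitted, used = [], 0
    for size in packet_sizes:
        if used + size > budget_bytes:
            break  # the rest waits for a later interval
        admitted.append(size)
        used += size
    return admitted


def fill_cos_queue(vnic_queues, budgets):
    """Enforce per-vNIC rate limits first, then round-robin into one COS queue."""
    limited = [
        [(vnic, size) for size in rate_limit(sizes, budgets.get(vnic))]
        for vnic, sizes in vnic_queues.items()
    ]
    interleaved = chain.from_iterable(zip_longest(*limited, fillvalue=_EMPTY))
    return [pkt for pkt in interleaved if pkt is not _EMPTY]


# vNIC1 and vNIC2 share one COS; only vNIC1 has a (hypothetical) per-interval budget.
queue = fill_cos_queue(
    {"vNIC1": [1500] * 4, "vNIC2": [1500] * 4},
    budgets={"vNIC1": 3000},
)
print([vnic for vnic, _ in queue])
# ['vNIC1', 'vNIC2', 'vNIC1', 'vNIC2', 'vNIC2', 'vNIC2']
```

Because the limiter runs before the Round Robin step, a rate-limited vNIC simply drops out of the rotation once its budget is spent, and its sibling keeps the COS queue busy.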

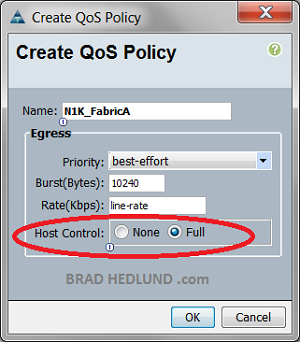

Side note: There are two different QoS modes available for each virtual adapter: 1) Host control NONE, or 2) Host control FULL. In NONE mode, COS markings from the server are ignored and overwritten with the virtual adapter’s COS setting in UCS Manager. In FULL mode, COS markings from the server are preserved and used to determine which COS queue on the Cisco VIC each packet is serviced from. The graphic above depicts NONE mode, where each virtual adapter has a solid black line to a specific COS queue. FULL mode would have dotted lines from each virtual adapter to each COS queue.
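The difference between the two modes comes down to which COS value selects the queue. A minimal Python sketch, with a hypothetical function name; the fallback for unmarked packets in FULL mode is an assumption of this sketch, not something stated above.

```python
from typing import Optional


def queue_cos(host_control: str, vnic_cos: int, packet_cos: Optional[int]) -> int:
    """Pick the COS queue a packet from the server lands in on the adapter."""
    if host_control == "none":
        # Server marking is ignored and overwritten with the vNIC's class
        return vnic_cos
    if host_control == "full":
        # Server marking is preserved; unmarked packets fall back to the
        # vNIC's class (assumed behavior for this sketch)
        return packet_cos if packet_cos is not None else vnic_cos
    raise ValueError(f"unknown host control mode: {host_control!r}")


print(queue_cos("none", vnic_cos=5, packet_cos=1))     # 5
print(queue_cos("full", vnic_cos=5, packet_cos=1))     # 1
print(queue_cos("full", vnic_cos=5, packet_cos=None))  # 5
```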

COS 7 is a reserved strict priority queue for UCS Manager to manage the Cisco VIC settings and policies. You can, for example, change bandwidth settings on the fly for a virtual adapter while the server is running without needing to reboot the server!

By default, all FC virtual adapters are assigned COS 3, a 50% minimum bandwidth, and “No Drop” service. You can change the COS value and minimum bandwidth if you like, but you cannot change the “No Drop” setting (why would you want to anyway?). The “No Drop” policy provides a lane of lossless Ethernet, normally used by FCoE traffic.
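What “No Drop” changes is the behavior when a class’s buffer fills up. In Data Center Bridging, lossless classes are typically delivered with Priority Flow Control (IEEE 802.1Qbb), which pauses the sender for that priority instead of discarding frames. The Python toy below is a deliberately simplified illustration of that contrast, not a model of the UCS buffer architecture; the class name and capacities are hypothetical.

```python
from collections import deque


class ClassQueue:
    """Toy egress queue for one class of service, measured in packets."""

    def __init__(self, capacity, no_drop):
        self.capacity = capacity
        self.no_drop = no_drop
        self.buffer = deque()
        self.dropped = 0
        self.pauses_sent = 0

    def enqueue(self, packet):
        if len(self.buffer) < self.capacity:
            self.buffer.append(packet)
            return "queued"
        if self.no_drop:
            # Lossless lane: ask the sender to pause this priority instead of
            # discarding the frame (the role PFC plays for FCoE).
            self.pauses_sent += 1
            return "paused"
        # Ordinary class: the frame is tail-dropped and upper layers must recover.
        self.dropped += 1
        return "dropped"

    def dequeue(self):
        return self.buffer.popleft() if self.buffer else None


fc = ClassQueue(capacity=2, no_drop=True)
best_effort = ClassQueue(capacity=2, no_drop=False)
print([fc.enqueue(i) for i in range(3)])           # ['queued', 'queued', 'paused']
print([best_effort.enqueue(i) for i in range(3)])  # ['queued', 'queued', 'dropped']
```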

The key takeaway here is that the Cisco VIC is capable of enforcing a centrally managed bandwidth policy for up to 8 different traffic classes enforced on the adapter for any traffic transmitted by the server. The bandwidth policy set in UCS Manager defines the minimum bandwidth available to each Class of Service during periods of congestion, and it can also set maximum bandwidths on a per virtual adapter basis. Furthermore, the Cisco VIC bandwidth management is able to account for ALL traffic using the adapter, including FCoE, something VMware Network I/O Control is lacking. (More on that in a future article).

As if that wasn’t enough, the very same policy in UCS Manager that defines how the Cisco VIC manages bandwidth is the very same policy that defines how the network fabric will manage bandwidth when transmitting to the servers! This provides an unmatched level of server + network integrated bandwidth management from a single policy engine, UCS Manager.

OK, now that we have established the comprehensive server + network bandwidth management of Cisco UCS, along with the unique ability of the Cisco VIC (Palo) to present the server with many different virtual adapters and give each one a guaranteed minimum bandwidth, let’s see how we can use this powerful combination in (4) Cisco UCS + VMware 10GE design examples with intelligent QoS.

Before we get started with the designs, I am going to first categorize possible traffic types on a typical VMware host into two main categories: Required & Optional.

Required traffic: VM Data (guest virtual machines), Management (service console / vmkernel), (1) means of central access to storage (FCoE, NFS, iSCSI, etc.), and finally vMotion (DRS) … I know, I know, some of you will say “vMotion isn’t required!” For the context of this discussion, it is. Deal with it :-)

Optional traffic: (1) or more additional means of central storage access (e.g. NFS datastores), Fault Tolerance, Real Time traffic (voice/video, market data).

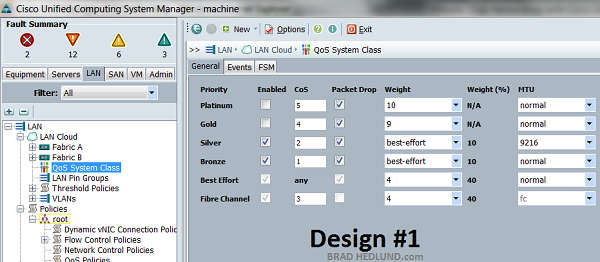

The first design example (Design #1) accounts for only the Required traffic. We are using Cisco UCS (rack mount or blade servers, or both) with the Cisco VIC adapter installed. On the vSphere host we are using the standard vSwitch or the vNetwork Distributed Switch (vDS).

Design #1 – Cisco UCS + VIC QoS Design, Required traffic only

Design #1 above starts out with just the Required traffic profile. We have Management, VM Data, vMotion, and block based central storage access via FCoE. We are using Cisco UCS rack mount or blade servers with the Cisco VIC installed, presenting the VMware host with (8) virtual adapters: (2) FC HBAs and (6) 10GE Ethernet NICs. The VMware hypervisor is running either the standard vSwitch or vDS. There are (3) Port Groups defined: Management, VM Data, and vMotion. Each Port Group has its own pair of virtual adapters.

It’s important to observe that each virtual adapter has its own virtual Ethernet port on the UCS Fabric Interconnect; there is a 1:1 correlation between virtual adapter and virtual network interface. The cable connecting the virtual adapter to its virtual Ethernet interface is a virtual cable in the form of a VN-Tag. This 1:1 correlation is important because the virtual network interface on the Fabric Interconnect inherits the exact same minimum bandwidth policy as the virtual adapter it is assigned to.

Side note: Because the vSwitch or vDS is not capable of intelligently classifying and marking traffic with COS settings, we are using the mode “Host Control NONE” on these virtual adapters and allowing the Cisco VIC to apply the markings depending on which virtual adapter services the traffic.

Each virtual adapter in a pair is associated to a different fabric for the obvious reasons of redundancy, and the Port Group using the adapter pair is configured for Active/Standby teaming. As shown in the diagram we can also alternate whether a Port Group is actively using Fabric A or Fabric B. This is an important design tool we will use for deterministic traffic placement in a shared bandwidth environment.

Each virtual adapter pair is assigned to a different Class of Service (COS) within UCS Manager, of which there are six to choose from: Fibre Channel, Best Effort, Bronze, Silver, Gold, and Platinum. Each service level can be assigned a different COS value (0-6), and, more importantly, you assign a bandwidth weight to each service level.

In Design #1 above, the virtual adapters used by the MGMT Port Group have been assigned a minimum bandwidth of 10% (and a 1GE transmit rate limit), the virtual adapters for VM Data a minimum of 40% (no max), and the vMotion virtual adapters a 10% minimum (no max). The Fibre Channel class was adjusted from its default of 50% to 40% (no max). The minimums add up to 100% (per fabric). The upstream network fabric also applies the same bandwidth policy when transmitting traffic to the servers. I don’t need to be concerned with vMotion traffic received from other servers saturating the link and affecting other important traffic, because the upstream network (Fabric Interconnect) will ensure other traffic has fair access to bandwidth based on the same minimum bandwidth policy.
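The relationship between the per-class bandwidth weights and the Design #1 percentages can be sketched in Python. This assumes, for the sketch only, that each class’s percentage is its weight divided by the total weight of the enabled classes; that normalization is why the minimums always add up to 100% per fabric. The weight values are hypothetical, chosen to land on Design #1’s 10/40/10/40 split, and the MGMT rate limit is not modeled.

```python
def weight_percentages(weights):
    """Normalize relative class weights into percentage-of-link minimums."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one class needs a positive weight")
    return {name: 100.0 * w / total for name, w in weights.items()}


def guaranteed_gbps(percentages, link_gbps=10.0):
    """Minimum bandwidth each class is entitled to during congestion."""
    return {name: link_gbps * pct / 100.0 for name, pct in percentages.items()}


# Hypothetical weights that reproduce the Design #1 minimums (per fabric)
DESIGN_1_WEIGHTS = {"MGMT": 1, "VM Data": 4, "vMotion": 1, "Fibre Channel": 4}

pct = weight_percentages(DESIGN_1_WEIGHTS)
print(pct)                   # {'MGMT': 10.0, 'VM Data': 40.0, 'vMotion': 10.0, 'Fibre Channel': 40.0}
print(sum(pct.values()))     # 100.0
print(guaranteed_gbps(pct))  # {'MGMT': 1.0, 'VM Data': 4.0, 'vMotion': 1.0, 'Fibre Channel': 4.0}
```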

For traffic transmitted from host to network, or network to host, vMotion bullies will be quarantined to their 10% of link bandwidth IF there is saturation & congestion. If no congestion exists, vMotion has access to the full 10GE of bandwidth. Furthermore, it is important to observe that vMotion and VM Data traffic have been separated onto different fabrics. Hence under normal operations, when both fabrics are operational, vMotion and VM Data traffic will not share any bandwidth; rather, they will each have access to 10GE simultaneously on their respective fabrics. Only under a rare failed-fabric condition would VM Data and vMotion traffic share link bandwidth, and only during periods of congestion while one fabric is down would the intelligent QoS policy need to enforce bandwidth shares between them.
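The deterministic placement argument can be checked with a small Python model of Active/Standby teaming. The layout below assumes VM Data is active on Fabric A while vMotion and MGMT are active on Fabric B, mirroring the placement described for the later designs; the function names are hypothetical.

```python
from typing import Optional


def transmitting_fabric(active, standby, fabrics_up) -> Optional[str]:
    """Active/Standby teaming: use the active uplink, fail over to standby."""
    if active in fabrics_up:
        return active
    if standby in fabrics_up:
        return standby
    return None  # both paths down


def fabric_sharing(port_groups, fabrics_up):
    """Which port groups share each operational fabric's 10GE link."""
    placement = {fabric: [] for fabric in sorted(fabrics_up)}
    for pg, (active, standby) in port_groups.items():
        fabric = transmitting_fabric(active, standby, fabrics_up)
        if fabric is not None:
            placement[fabric].append(pg)
    return placement


# Assumed Design #1 layout: (active fabric, standby fabric) per port group
DESIGN_1 = {"VM Data": ("A", "B"), "vMotion": ("B", "A"), "MGMT": ("B", "A")}

print(fabric_sharing(DESIGN_1, {"A", "B"}))  # {'A': ['VM Data'], 'B': ['vMotion', 'MGMT']}
print(fabric_sharing(DESIGN_1, {"B"}))       # {'B': ['VM Data', 'vMotion', 'MGMT']}
```

Only in the second case, with Fabric A down, do VM Data and vMotion land on the same link, which is exactly when the minimum-bandwidth policy has to arbitrate between them.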

Design #2 – Cisco UCS + VIC QoS Design, Required + 1 Optional

Design #2 above is very similar to Design #1, only now we have added a Port Group for NFS traffic. You may wish to have your VMware hosts boot from an ESX image on the SAN via Fibre Channel; however, once the host is fully booted you may want to use NFS datastores for the VMs to access their virtual disks. To accommodate the NFS traffic we have provisioned two more virtual adapters on the Cisco VIC, for a total of (8) virtual Ethernet adapters. The NFS Port Group is assigned to its own pair of virtual adapters and uses Active/Standby teaming.

The Active/Standby teaming configuration of each Port Group is set up such that VM Data will have a fabric to itself while vMotion, NFS, and MGMT share the other fabric.

Because our bandwidth minimums cannot exceed 100% (per fabric), we make a slight modification from Design #1: the VM Data Port Group now has a 30% minimum bandwidth (rather than 40%). In return, the NFS Port Group has been given a 10% minimum via the “Gold” class of service, COS 4. Everything else stays the same. Below are the UCS Manager QoS System Class settings used to set up Design #2. Take special note of the MTU settings for both the Silver and Gold classes.

Once you have defined the QoS System Class settings in UCS Manager (as shown above), you then create a “QoS Policy” and give it a name. You can see in the lower left of the above screen shot that I have already created a number of these QoS policies. One of them, named “IP_Storage”, is the one we will assign to the virtual adapters used for NFS traffic. The QoS Policy defines a few things: 1) the name of the policy; 2) the class of service the policy is associated with; 3) any rate limits you might want to enforce; and 4) whether the policy will overwrite or preserve COS markings from the server (remember: Host control FULL or NONE). Below is a screen shot of the QoS policy “IP_Storage” created for NFS traffic.
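The four attributes a QoS Policy defines can be captured in a small data model. This is a hypothetical Python sketch of the policy’s contents, not the UCS Manager API or object model; the field names and lowercase class labels are invented for illustration. The IP_Storage values (Gold class, no rate limit, Host control NONE) are assumptions consistent with Design #2 running the standard vSwitch or vDS.

```python
from dataclasses import dataclass
from typing import Optional

# The six service levels named in the text (lowercase labels are this sketch's choice)
SERVICE_LEVELS = {"fibre-channel", "best-effort", "bronze", "silver", "gold", "platinum"}


@dataclass(frozen=True)
class QosPolicy:
    """Hypothetical model of the four things a UCS Manager QoS Policy defines."""

    name: str                       # 1) policy name
    priority: str                   # 2) class of service it is associated with
    rate_limit_mbps: Optional[int]  # 3) optional transmit rate limit (None = no max)
    host_control: str               # 4) "none" overwrites server COS, "full" preserves it

    def __post_init__(self):
        if self.priority not in SERVICE_LEVELS:
            raise ValueError(f"unknown class of service: {self.priority!r}")
        if self.rate_limit_mbps is not None and self.rate_limit_mbps <= 0:
            raise ValueError("rate limit must be a positive number of Mbps")
        if self.host_control not in ("none", "full"):
            raise ValueError("host_control must be 'none' or 'full'")


# Assumed values for the NFS policy in Design #2
IP_STORAGE = QosPolicy(name="IP_Storage", priority="gold", rate_limit_mbps=None, host_control="none")
print(IP_STORAGE)
```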

After you have created the QoS Policy, you then assign it to the vNIC. You can manually assign the policy to each vNIC of your choice, or better yet, you can do it once with a vNIC Template. The vNIC Template can be used every time you create a new service profile or service profile template. vNIC Templates can also be defined as “Updating Templates”, which means if you decide to make a change to the template (such as VLAN or QoS settings) all servers and adapters created from that template will be updated to match the template.
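The practical difference an “Updating Template” makes can be illustrated with a small Python model: changes to the template are pushed to every vNIC created from it, whereas a non-updating (initial) template only supplies the starting values. Everything here, including class, method, template, and VLAN names, is hypothetical and only illustrates the propagation behavior described above.

```python
from dataclasses import dataclass, field


@dataclass
class Vnic:
    name: str
    qos_policy: str
    vlans: list[str]


@dataclass
class VnicTemplate:
    """Hypothetical model of a vNIC Template and the vNICs created from it."""

    name: str
    qos_policy: str
    vlans: list[str]
    updating: bool  # False behaves like a one-time "initial" template
    vnics: list[Vnic] = field(default_factory=list)

    def create_vnic(self, vnic_name):
        vnic = Vnic(vnic_name, self.qos_policy, list(self.vlans))
        self.vnics.append(vnic)
        return vnic

    def change(self, qos_policy=None, vlans=None):
        if qos_policy is not None:
            self.qos_policy = qos_policy
        if vlans is not None:
            self.vlans = list(vlans)
        if self.updating:
            # Updating template: push the change to every vNIC built from it
            for vnic in self.vnics:
                vnic.qos_policy = self.qos_policy
                vnic.vlans = list(self.vlans)


nfs = VnicTemplate("NFS-A", "IP_Storage", ["NFS"], updating=True)
server1 = nfs.create_vnic("server1-nfs-a")
server2 = nfs.create_vnic("server2-nfs-a")
nfs.change(vlans=["NFS", "NFS-2"])
print(server1.vlans, server2.vlans)  # ['NFS', 'NFS-2'] ['NFS', 'NFS-2']
```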

See the Design #1 section above for a screen shot showing a QoS Policy being assigned to a vNIC Template.

Note: You do not need to create a QoS Policy for the Fibre Channel (FCoE) traffic and assign it to the vHBAs. That is already there by default.

Design #3 – Cisco UCS + VIC QoS, Required + 2 Optional + Realtime

For Design #3, let’s work with a much more difficult scenario where we have the Required traffic, (2) Optional traffic classes, and, just for the fun of it (because I like to torture myself), Realtime traffic too! Realtime traffic could be a multicast application serving a live video stream, a voice application such as Cisco Unified Communications (caveat*), or perhaps a multicast feed of market data. Whatever it is, let’s assume that it’s important traffic. As is typical with realtime traffic, the payload is not large and bulky; rather, it is a consistent flow of small to mid-size packets where lost packets are noticed immediately by the data consumer.

In Design #3 above we have built upon Design #2 by adding Port Groups and virtual adapter pairs for Fault Tolerance and Realtime traffic. We now have a total of (12) virtual adapters: (10) 10GE Ethernet NICs and (2) FC HBAs.

Realtime traffic from a voice application, for example, usually expects to have special treatment in the network. It’s customary for network infrastructure engineers to configure special treatment for packets marked with COS 5 in the presence of voice applications. Hence, the network upstream from this Cisco UCS fabric may very well be configured that way, so it makes sense for us to assign that traffic to the “Platinum” COS 5 service level, such that when the traffic leaves Cisco UCS it will be properly marked with COS 5.

Cisco UCS, by default, provides lossless Ethernet service for FCoE traffic. In addition, there is one more lane of lossless Ethernet service available for us to configure and assign to any class of service. In Design #3 we assign lossless Ethernet to the “Platinum” service, thereby preventing any packet loss for that class within the UCS fabric. If we know that this application will be multicast based (such as live streaming video), we can also enable optimized multicast treatment for the “Platinum” class. See below.

The VMware Fault Tolerance traffic has been given its own pair of virtual adapters, assigned to the “Gold” class of service with a minimum bandwidth weighting of 20%. Again we are using Active/Standby adapter teaming to precisely control which traffic types are shared with others during normal operations when both fabrics are operational. Notice that Fault Tolerance and vMotion traffic are together on Fabric B, separated from VM Data traffic on Fabric A.

The Fault Tolerance traffic has been given a higher 20% weighting than the other traffic (excluding FCoE). This setting is in line with guidance in VMware’s Network I/O Control Best Practices document, which describes the importance of Fault Tolerance traffic to the performance of VMs and hence recommends giving it a higher weighting.

Another interesting observation is that vMotion and MGMT are sharing the same pair of virtual adapters. Remember, I can currently configure six different traffic classes in Cisco UCS, yet I have 7 classes of traffic to work with in Design #3. That leaves me no choice but to assign two different traffic types to the same class of service. I just happened to choose vMotion and MGMT; you may choose something different in your designs, as there is no black-and-white, right-or-wrong answer. Also, just because two different traffic types share the same class of service doesn’t mean they need to share the same virtual adapters. I could easily have provisioned another pair of virtual adapters just for MGMT.

Design #4 – QoS Design with (2) 10GE adapters

Up to this point we have been looking at designs strictly based on Cisco UCS with the fancy Cisco VIC. Now we will look at another design approach that could be used with Cisco UCS and the Cisco VIC, with Cisco UCS without the Cisco VIC, or with non-Cisco servers connected to a Cisco Nexus 5000 network. Furthermore, I’m sure the Nexus 1000V product management team has been a little upset that I haven’t mentioned the Nexus 1000V in a single design yet (heh). No offense guys, but one of the main points I wanted to show here is the inherent QoS capabilities built into the Cisco UCS architecture, with or without Nexus 1000V. Having said that, let’s dive into the Nexus 1000V with Design #4 to show its flexible deployment and value in many types of scenarios, Cisco UCS or not. ;-)

In Design #4 we are using the same traffic profile of Required + (2) Optional + Realtime that was used in Design #3. Furthermore, if this is a Cisco UCS deployment, we can use the same UCS Manager QoS System Class settings from Design #3. The main difference here is the fact that our server (either Cisco or non-Cisco) has been provisioned with (2) 10GE adapters visible to the VMware host. Remember from previous designs that we used the Cisco VIC virtual adapter capabilities to mark and schedule traffic. The first step of classifying traffic was done via manual steering of Port Groups to a specific set of virtual adapters.

With the Nexus 1000V you can both classify and mark traffic directly in the hypervisor switch (before it reaches the physical adapter), and with Nexus 1000V version 1.4 you can also provide weighted minimum bandwidth scheduling (Shares) as packets egress the hypervisor switch, much like VMware Network I/O Control (NetIOC).

With Nexus 1000V we no longer need to provision adapters (virtual or physical) for the sake of QoS classification, as we needed to do in the previous designs with the standard vSwitch or vDS. This allows for a more streamlined configuration for the server administrator who can now provision a VMware Host with (2) adapters, rather than (8) or (12). Furthermore, you have the flexibility to use this approach if you have the Cisco VIC, or with any other standard CNA or 10GE adapter.

For deployments with Cisco UCS, even though we are not leveraging the adapter virtualization capabilities of the Cisco VIC to a great degree, the Cisco VIC still plays an important role through its QoS capabilities. The class of service based bandwidth policies defined in UCS Manager are still enforced right on the adapter. The Nexus 1000V classifies and marks the traffic, and the Cisco VIC places the packets into one of its (8) COS based queues for scheduled transmission. Remember there are two modes we can define on the Cisco VIC: Host control FULL or Host control NONE. If Design #4 were leveraging the Cisco VIC, we would use the “Host control FULL” setting, which tells the Cisco VIC to preserve and use the COS marking made by the Nexus 1000V. See the example to the right.

Enforcing a QoS policy on the physical CNA (Cisco VIC) certainly has its advantages; however, QoS can still be accomplished (albeit somewhat less holistically) if you have a standard CNA or 10GE adapter that provides no special QoS capabilities. Let’s assume you have a CNA adapter (I won’t mention names) that does basic first-in, first-out (FIFO) queuing for standard Ethernet traffic. With the Nexus 1000V classifying and marking traffic, you can still provide QoS enforcement at the network switch port (Nexus 5000) or UCS Fabric Interconnect. In this scenario we rely on the fact that the network switch will drop some traffic that has exceeded the policy during periods of congestion. When those packets are dropped, the TCP stack of the server or VM reacts by throttling back its sending rate. The TCP send rate then gradually increases until a packet is lost again, and the cycle repeats. The result is that the TCP based application finds the bandwidth ceiling provided by the QoS policy enforced at the network switch.
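The drop-and-recover cycle described above is the classic additive-increase/multiplicative-decrease (AIMD) sawtooth. The Python sketch below is a textbook idealization under stated assumptions: rates instead of congestion windows, one step per round trip, and a switch that drops whenever the offered rate exceeds the class’s share. Real TCP stacks (CUBIC, for example) grow differently; the ceiling and step sizes are hypothetical.

```python
def aimd_trace(ceiling_gbps, steps, start_gbps=1.0, increase_gbps=0.5, decrease_factor=0.5):
    """Send rate at each step of an idealized AIMD sender.

    The switch is assumed to drop traffic whenever the offered rate exceeds
    the class's share (ceiling_gbps); the sender halves its rate after a drop
    and otherwise adds a fixed increment.
    """
    rate, trace = start_gbps, []
    for _ in range(steps):
        trace.append(rate)
        if rate > ceiling_gbps:
            rate *= decrease_factor  # loss detected: multiplicative decrease
        else:
            rate += increase_gbps    # no loss: additive increase
    return trace


trace = aimd_trace(ceiling_gbps=4.0, steps=14)
print(trace)
# [1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 2.25, 2.75, 3.25, 3.75, 4.25, 2.125]
```

The sender never settles exactly on the ceiling; it oscillates around it, which is why this approach is “somewhat less holistic” than scheduling on the adapter.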

The TCP based bandwidth throttling of course only works with TCP flows. If you have a heavily used UDP based application, your switch will still enforce the QoS policy but your sending machine may not react to it. The result is the transmit utilization on the link between your server and switch becoming out of policy and possibly affecting other well behaved TCP applications on that server. This would not happen with an adapter like the Cisco VIC as it can throttle any flows during congestion, TCP or not, right on the adapter before transmitting on the wire.

Hypervisor based QoS

Rather than relying on TCP based throttling, another approach you can use with standard adapters that lack QoS capabilities is Nexus 1000V weighted fair queuing (WFQ), available in version 1.4, or VMware Network I/O Control. Remember, these capabilities live in the hypervisor switch and provide Cisco VIC-like QoS in software at the hypervisor level.

Some important caveats with hypervisor based QoS worth discussing:

1) With either VMware NetIOC or Nexus 1000V WFQ, there is no visibility into FCoE traffic utilization on a CNA adapter. The hypervisor switch has no visibility (yet) into the underlying hardware of the CNA to determine FCoE bandwidth utilization at any given moment. This can result in a miscalculation of available bandwidth. The hypervisor switch might believe the full 10GE of bandwidth is not being used and therefore determine no policy needs to be enforced, however once the traffic is delivered to the CNA for transmission it finds a congested link. Therefore, if you are using FCoE you should proceed with caution. These technologies will work better where storage access is on a separate FC adapter or where all Ethernet storage is IP based (iSCSI or NFS) and fully managed by, and visible to, the hypervisor switch.

2) Assuming you are not subject to caveat #1, it’s important to understand how well this technology will integrate with a broader network-wide QoS policy. For example, the Nexus 1000V can apply a bandwidth transmission policy while at the same time marking the traffic for consistent treatment in the upstream network. In contrast, VMware Network I/O Control does not mark any traffic. As a result, the upstream network must either independently re-classify the traffic or do nothing at all and just “let it rip”. If you take the latter “let it rip” approach, there are no controls on how the network transmits traffic to a server. You can then either overwhelm your server with receive traffic and defeat the purpose of implementing NetIOC to begin with, or implement receive-based rate limiters on every VMware host out of fear of excessive traffic, again defeating the intelligent bandwidth sharing that NetIOC sets out to accomplish.
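To make caveat #1 concrete, here is a deliberately simple Python sketch of the blind spot: a hypervisor-level scheduler can only compare the Ethernet traffic it handles against the link speed, while the CNA’s wire also carries FCoE. The function names and traffic figures are hypothetical.

```python
def hypervisor_sees_congestion(eth_gbps, link_gbps=10.0):
    """All a hypervisor scheduler can check: the Ethernet traffic it handles."""
    return eth_gbps > link_gbps


def link_is_congested(eth_gbps, fcoe_gbps, link_gbps=10.0):
    """What the CNA actually faces: Ethernet plus FCoE on the same 10GE wire."""
    return eth_gbps + fcoe_gbps > link_gbps


# Hypothetical moment: 7 Gbps of vSwitch traffic while FCoE is moving 4 Gbps
eth, fcoe = 7.0, 4.0
print(hypervisor_sees_congestion(eth))  # False: no shares enforced
print(link_is_congested(eth, fcoe))     # True: the wire is oversubscribed
```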

Bottom line: Hypervisor based QoS should be implemented in coordination with a broader network based QoS policy. In the absence of intelligent QoS capable adapters, hypervisor based QoS can help, but it’s not the complete answer.

Design #5 – QoS Design with (2) 10GE adapters, no Cisco VIC, no Nexus 1000V

Here is the last design we will briefly discuss. Let’s imagine you don’t have Cisco UCS (the horror! :-) just kidding). Since this could be a standard Cisco C-Series rack mount server, you might or might not have the Cisco VIC. And you don’t have the Nexus 1000V. What can we do in terms of implementing QoS in this scenario? Let’s take a look.

Without the Nexus 1000V or Cisco VIC virtual adapters, we know that classifying and marking traffic within the hypervisor or at the adapter is not possible. If you do have the Cisco VIC installed, let’s assume for now that we can’t provision virtual adapters, given that this implementation is Nexus 5000 and not UCS (not commenting on the future right now). With a Cisco VIC installed, but without virtual adapters or the Nexus 1000V, we won’t be able to leverage the (8) COS based queues in an effective way. So at this point it could be the Cisco VIC or any other standard CNA adapter (Emulex or QLogic), and we have the same design challenges.

In this scenario we need an intelligent network switch that can classify traffic as it enters. Once the traffic is classified, the switch should be able to provide minimum bandwidth shares to each traffic class when transmitting it to the destination. That switch, of course, would be the Nexus 5000 ;-) The burden of traffic classification and QoS has been placed entirely upon the physical network switch.
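Ingress classification boils down to matching each frame against an ordered list of rules and assigning it to a class. On a real Nexus 5000 this is expressed with NX-OS QoS class maps and policy maps, not Python; the sketch below only illustrates the matching logic. The FCoE EtherType (0x8906) and NFS port (2049) are standard values, while the VLAN plan, class names, and function name are hypothetical, with “class-default” borrowing NX-OS’s name for unmatched traffic.

```python
from dataclasses import dataclass
from typing import Optional

FCOE_ETHERTYPE = 0x8906  # EtherType used by FCoE frames
NFS_PORT = 2049          # standard NFS port

# Hypothetical VLAN plan for this example only
MGMT_VLAN = 10
VMOTION_VLAN = 20


@dataclass(frozen=True)
class Frame:
    vlan: int
    ethertype: int
    dst_port: Optional[int] = None  # TCP/UDP destination port, if any


def classify(frame: Frame) -> str:
    """Ordered match rules: the first rule that matches decides the class."""
    if frame.ethertype == FCOE_ETHERTYPE:
        return "fcoe"
    if frame.dst_port == NFS_PORT:
        return "ip-storage"
    if frame.vlan == VMOTION_VLAN:
        return "vmotion"
    if frame.vlan == MGMT_VLAN:
        return "management"
    return "class-default"


print(classify(Frame(vlan=100, ethertype=0x8906)))              # fcoe
print(classify(Frame(vlan=30, ethertype=0x0800, dst_port=2049)))  # ip-storage
print(classify(Frame(vlan=99, ethertype=0x0800, dst_port=443)))   # class-default
```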

Understanding that, how will the traffic from the server be managed or react to the QoS policy in the network? Again we have the two choices of hypervisor based QoS or TCP based throttling. If there was very little or no FCoE in use, one solid approach might be to use VMware Network I/O Control, and then try to replicate the NetIOC QoS policy as best you can in the intelligent network switch.

If you have FCoE implemented, as shown in the diagram, the solid approach would be to leave the server configuration as-is, do not enable NetIOC, and let the upstream network switch selectively drop packets during congestion based on its QoS policy. The dropped TCP packets will have the result of TCP based applications finding their defined bandwidth ceiling during the periods of congestion. If you have network intense UDP based applications you may want to experiment with VMware NetIOC, understanding the potential for miscalculations in the presence of FCoE. VMware Network I/O Control will be able to throttle UDP applications based on its policy at the hypervisor level.

Call to Action

It’s important to understand that this article is not at all intended to say “This is how you should do it” down to every technical detail. My main goal here was to show you some examples of what is possible with the technology choices you have. There are a lot of nerd knobs in these designs that could be turned either way.

Having said that, I’m just one man shouting into my little megaphone here. I would really like to hear from some VCDX folks, either employed by VMware or not, to contribute to this discussion. It could be in the comments section below or in your own forum. I’m hoping we can come to agreement on some of the solutions presented here or variations thereof.

Presentation Download

You made it this far? It’s good to know I’m not the only one who’s crazy here.

I would like to thank you for your time and attention! Download a PDF of the original slides, here:

VMware 10GE Design Deep Dive with Cisco UCS, Nexus - PDF

Disclaimer: The author is an employee of Cisco Systems, Inc. The views and opinions expressed by the author do not necessarily represent those of Cisco Systems, Inc. The author is not an official media spokesperson for Cisco Systems, Inc. The designs described in this article are not Cisco official Validated Designs. Please consult your local Cisco representative for design assistance.