Hadoop network design challenge

I had a bit of fun recently working on a hypothetical network design for a large-scale Hadoop implementation. A friend of mine mentioned he was responding to a challenging RFP, and when I asked him more about it out of curiosity, he sent me these requirements:

- (4) Containers
- In each Container:
  - (25) Racks
  - (64) Hadoop nodes per rack
  - (2) GE per Hadoop node
- Try to stay under 15KW network power per container
- Try to minimize cables between containers
- Non-blocking end-to-end, across all 6400 servers in the (4) containers
- Application: Hadoop

Pretty crazy requirements right? Though certainly not at all unimaginable given the explosive growth and popularity of Big Data and Hadoop. Somebody issued an RFP for this design. Somebody out there must be serious about building this.

So I decided to challenge myself with these guiding requirements, and after a series of mangled and trashed PowerPoint slides (my preferred diagramming tool) I finally sorted things out and arrived at two designs. Throughout the process I questioned the logic of such a large cluster with a requirement for non-blocking end-to-end. After all, Hadoop was designed with awareness of and optimization for an oversubscribed network.

Read my Understanding Hadoop Clusters and the Network post if you haven’t already.

Such a large cluster built for non-blocking end-to-end is going to have a lot of network gear that consumes power, takes up rack space, and costs money. That is money that could otherwise be spent on more Hadoop nodes, the one thing that actually makes the cluster useful.

UPDATE: After re-thinking these designs one more time (huge thanks to a sanity check from @x86brandon) and making some changes, I was pleased to see how large a non-blocking cluster I could potentially build with a lot less gear than I had originally thought. Maybe massive non-blocking clusters are not so crazy after all ;-)

With that in mind I built two hypothetical designs just for fun and comparison purposes. The first design meets the non-blocking end-to-end requirement. The second design is 3:1 oversubscribed, which in my opinion makes a little more sense.

Design #1A, Non-blocking (1.06:1)

Here we have (4) containers each with 1600 dual attached Hadoop nodes, with a non-blocking network end-to-end. I’ve chosen the S60 switch for the top of rack because its large buffers are well suited for the incast traffic patterns and large sustained data streams typical of a Hadoop cluster. The S60 has a fully configured max power consumption of 225W. Not bad. I wasn’t able to dig up nominal power, so we’ll just use max for now.

The entire network is constructed as a non-blocking L3 switched network with massive ECMP using either OSPF or BGP. No need to fuss with L2 here at all. The Hadoop nodes do not care at all about having L2 adjacency with each other (Thank God!). Each S60 top of rack switch is an L2/L3 boundary and the (16) servers connected to it are in their own IP subnet.

There will be (4) S60 switches in each rack, each with 32 server connections (16 servers at 2GE each). Each server will have a 2GE bond to just one S60 (simplicity). Each S60 will have 3 x 10GE uplinks to a leaf tier of (5) Z9000 switches. The leaf tier aggregates the ToR switches: each Z9000 in this tier will have (60) 10GE links connecting to the S60 switches, and 16 x 40GE links connecting to the next layer up, a Spine of (16) Z9000 switches. The spine provides a non-blocking interconnect for all the racks in this container, as well as a non-blocking feed to the other containers. For power and RU calculations, the Z9000 is 2RU with a power consumption of just 800W.
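The port counts above can be checked with a little arithmetic. The sketch below uses only the figures quoted in this post; the variable names are mine, and it assumes each 10GE and 40GE link runs at its nominal rate.

```python
# Design #1A, one container: figures quoted in the post.
RACKS = 25
NODES_PER_RACK = 64
GE_PER_NODE = 2
S60_PER_RACK = 4
UPLINKS_PER_S60 = 3        # 10GE uplinks per S60
LEAF_Z9000 = 5             # leaf-tier Z9000 switches per container
LEAF_UPLINKS_40G = 16      # 40GE links from each leaf to the spine

# Top of rack: servers and GE connections per S60.
servers_per_s60 = NODES_PER_RACK // S60_PER_RACK         # 16 servers
ge_per_s60 = servers_per_s60 * GE_PER_NODE               # 32 x 1GE down
s60_ratio = ge_per_s60 / (UPLINKS_PER_S60 * 10)          # 32G down : 30G up

# Leaf tier: 10GE links arriving at each Z9000, and bandwidth up vs. down.
tor_uplinks = RACKS * S60_PER_RACK * UPLINKS_PER_S60     # 300 x 10GE
links_per_leaf = tor_uplinks // LEAF_Z9000               # 60 x 10GE
leaf_down_gbps = links_per_leaf * 10                     # 600 Gbps
leaf_up_gbps = LEAF_UPLINKS_40G * 40                     # 640 Gbps

print(f"S60 oversubscription: {s60_ratio:.3f}:1")
print(f"10GE links per leaf Z9000: {links_per_leaf}")
print(f"Leaf down/up: {leaf_down_gbps}G / {leaf_up_gbps}G")
```

By this arithmetic the only oversubscription is at the S60 (32G down against 30G up, roughly 1.067:1), while each leaf Z9000 has slightly more bandwidth toward the spine than it receives from the racks.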

The Z9000s in the Spine layer will use (20) of their (32) non-blocking 40GE interfaces, leaving room for scalability in the non-blocking design to a possible (6) containers and 9600 2GE nodes in a non-blocking cluster. Not bad!
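The scaling limit follows from the spine port count. As a rough sketch, assuming each leaf Z9000 has exactly one 40GE link to each of the (16) spine switches (my reading of the 16 uplinks per leaf, not something spelled out explicitly), the numbers work out like this:

```python
# Spine scaling for the non-blocking design; names are illustrative.
SPINE_PORTS_40G = 32         # 40GE interfaces on each spine Z9000
LEAVES_PER_CONTAINER = 5     # leaf Z9000 switches per container
NODES_PER_CONTAINER = 25 * 64

def spine_ports_used(containers: int) -> int:
    """40GE ports consumed on each spine switch (one per leaf, assumed)."""
    return containers * LEAVES_PER_CONTAINER

# Largest container count that still fits on a 32-port spine switch.
max_containers = SPINE_PORTS_40G // LEAVES_PER_CONTAINER
max_nodes = max_containers * NODES_PER_CONTAINER

print(f"Spine ports used at 4 containers: {spine_ports_used(4)}")
print(f"Max containers: {max_containers}, max nodes: {max_nodes}")
```

At (6) containers each spine switch would use 30 of its 32 ports, so a seventh container (35 ports) no longer fits.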

At (4) containers I came in around 30KW of network power footprint per container. It’s tough to get even close to the 15KW. I’m not sure that’s a realistic number.
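Here is one way to arrive at a figure near 30KW. It is a back-of-the-envelope sketch, assuming the (16) spine switches are counted as shared evenly across the (4) containers and that every switch draws the power figure quoted above (max for the S60).

```python
# Per-container network power for Design #1A; names are illustrative.
S60_MAX_W = 225
Z9000_W = 800
CONTAINERS = 4
S60_PER_CONTAINER = 25 * 4    # 25 racks x 4 S60 each
LEAF_PER_CONTAINER = 5
SPINE_TOTAL = 16              # assumed to be split evenly across containers

tor_w = S60_PER_CONTAINER * S60_MAX_W                 # top-of-rack tier
leaf_w = LEAF_PER_CONTAINER * Z9000_W                 # leaf tier
spine_share_w = SPINE_TOTAL * Z9000_W // CONTAINERS   # this container's share
total_w = tor_w + leaf_w + spine_share_w

print(f"ToR: {tor_w} W, leaf: {leaf_w} W, spine share: {spine_share_w} W")
print(f"Total per container: {total_w / 1000:.1f} KW")
```

Under these assumptions the top-of-rack tier alone comes to 22.5KW, which is already over the 15KW target before any Z9000 is counted.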

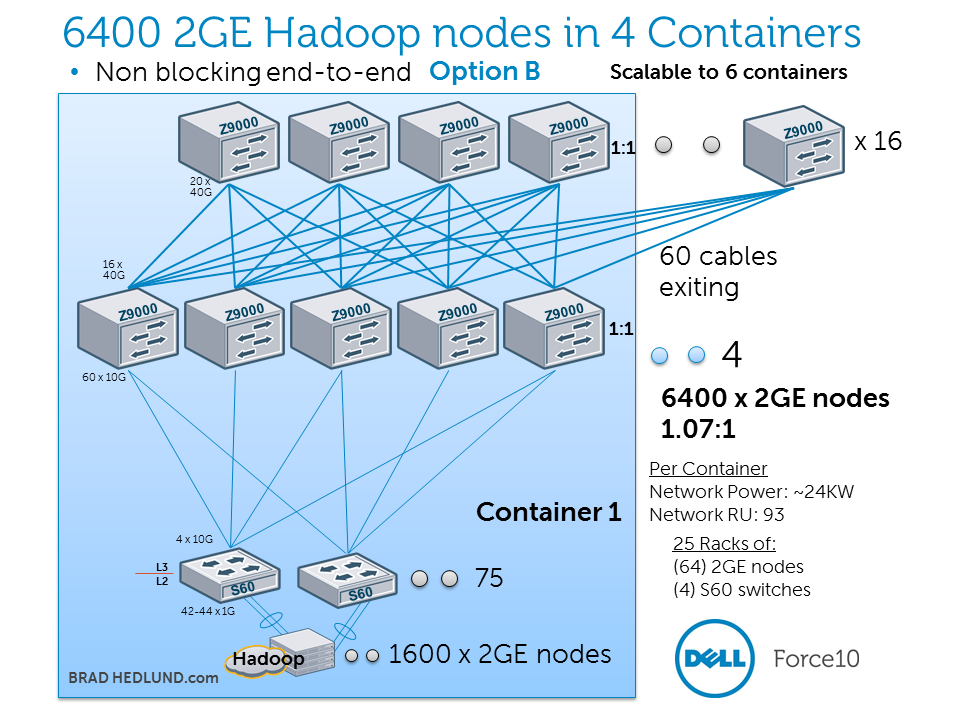

UPDATE: This design “Option A” is 1.06:1 oversubscribed – close enough to “non-blocking”. Another option would be to have (3) S60 switches per rack, each with (4) 10GE uplinks. This would increase the oversubscription to 1.07:1 and remove (25) S60 switches from each container, saving almost 6KW in power. Shown below as “Option B”.

Design #1B, Non-blocking (1.07:1)

In a nutshell this design “Option B” reduces the power consumption by almost 6KW, reduces rack space by 25RU, at the expense of increasing the average oversubscription by .01 to 1.07:1. Each of the 25 racks has (3) S60 switches with (4) 10GE uplinks. (2) of the S60 switches in each rack will have 42 server connections. (1) of the S60 switches will have (44) server connections. Not exactly balanced, but if power is a big concern (usually is), the minor imbalance and extra .01 potential blocking may be a small price to pay.
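The uneven split and the savings can be worked out from the rack figures. This is a sketch using only numbers from this post; the names are mine.

```python
# Design #1B, one rack: 128 GE connections spread over 3 S60 switches.
NODES_PER_RACK = 64
GE_PER_NODE = 2
UPLINK_GBPS = 4 * 10                  # (4) 10GE uplinks per S60
CONNECTIONS = [42, 42, 44]            # server connections per S60, as stated

rack_ge = NODES_PER_RACK * GE_PER_NODE            # 128 x 1GE per rack
assert sum(CONNECTIONS) == rack_ge                # the split covers every port

# Oversubscription per switch and for the rack as a whole.
per_switch = [c / UPLINK_GBPS for c in CONNECTIONS]
rack_ratio = rack_ge / (len(CONNECTIONS) * UPLINK_GBPS)

# Savings from removing one S60 (1RU, 225W max) from each of 25 racks.
saved_w = 25 * 225
saved_ru = 25 * 1

print("Per-switch ratios:", [f"{r:.2f}:1" for r in per_switch])
print(f"Rack aggregate: {rack_ratio:.3f}:1")
print(f"Saved per container: {saved_w} W, {saved_ru} RU")
```

By this arithmetic the rack aggregate is 128G against 120G, roughly 1.067:1, which is the same figure 32G against 30G gives for Option A. The .01 gap between the two options therefore looks like a rounding difference; the real change is that individual switches run at 1.05:1 or 1.10:1 rather than all at the same ratio.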

Design #2, 3:1 oversubscribed

I decided to try out a design where each S60 ToR switch is 3:1 oversubscribed and connected to a non-blocking fabric that connects all ToR switches in all the racks, in all the containers. There’s a big reason why I chose to have the oversubscription at the first access switch, and not in the fabric. I wanted to strive for the highest possible entropy, for the goal of flexible workload placement across the cluster. In this design pretty much every server is 3:1 oversubscribed to every other server in the cluster. There’s very little variation to that, which makes it easier to treat the entire network as one big cluster, one big common pool of bandwidth. Just like with the non-blocking design, where you place your workloads in this network makes very little difference.

Each S60 access switch has only (1) 10GE uplink, rather than (3), so fewer Z9000 switches are required to provide a non-blocking fabric. This reduces the power consumed by network gear by about 5KW in each container, and frees up 22RU of rack space compared to the previous non-blocking design Option A.

Notice that non-blocking Design #1 Option B has a lower power and RU footprint than this 3:1 oversubscribed Design #2.

UPDATE: I updated Design #2 to use the user port stacking capability of the S60 switch. I’ll stack just (2) switches at a time, thus allowing each Hadoop node’s 2GE bond to have switch diversity. This minimizes the impact to the cluster when the upstream Z9000 fails or loses power.

I’m not calling out the capital costs of the network gear in each design, but suffice it to say Design #2 will have lower costs, freeing up capital for more Hadoop nodes and building a larger cluster. That works out nicely, because with the oversubscribed design we’re able to quadruple the possible cluster size to (16) containers and 25600 2GE nodes.

By the way, I’m aware that the current scalability limit of a single Hadoop cluster is about 4000 nodes. Some Hadoop code contributors have said that forthcoming enhancements to Hadoop may increase scalability to over 6000 nodes. And, who’s to say you can’t have multiple Hadoop clusters sharing the same network infrastructure?

Cheers,

Brad

Disclaimer: The author is an employee of Dell, Inc. However, the views and opinions expressed by the author do not necessarily represent those of Dell, Inc. The author is not an official media spokesperson for Dell, Inc.