The vSwitch ILLUSION and DMZ virtualization

Server virtualization has gained tremendous popularity and acceptance, to the point that customers are now starting to host virtual machines from differing security zones on the same physical Host machine. Physical servers that were self-contained in their own DMZ network environment are now being migrated to virtual machines resting on a single physical Host server that may also be hosting virtual machines for other security zones.

The next immediate challenge with this approach becomes: How do you keep the virtual machines from differing security zones isolated from a network communication perspective? Before we go down that road, let's take a step back and revisit the commonly used network isolation methodologies…

Network Isolation Methodology & Policy

Before physical DMZ servers were migrated to virtual, communication from a server in one DMZ to a server in another DMZ was steered through a security inspection appliance. Traffic can be steered through a security appliance using physical network separation, or through logical network separation using network virtualization techniques such as VLANs, VRF, MPLS, etc.

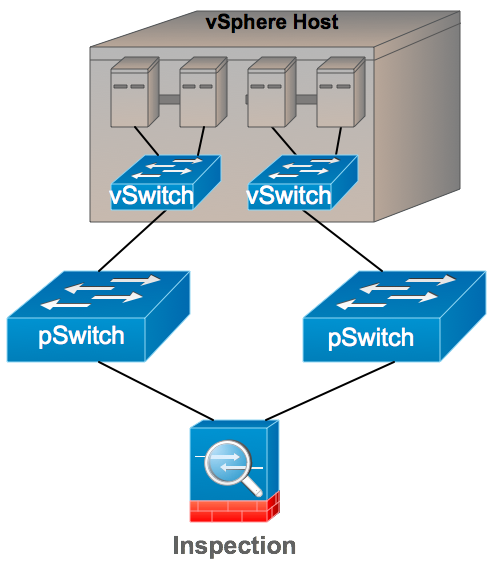

Figure 1 below shows traffic steering through physical network separation.

In Figure 1 above, traffic between the two groups of servers is steered through the security appliance simply because that is the only physical path by which the communication can take place. Physical separation works under the principle that each server and security appliance interface is correctly cabled to the proper switch and switch port. Isolation is provided by cabling.

The other method commonly used for traffic steering is through a means of logical network separation. In this case, a single switch can be divided into many different logical forwarding partitions. The switch hardware and forwarding logic prevents communications between these partitions. An example of a partition could be a VLAN in a Layer 2 switch, a VRF in a Layer 3 switch, or MPLS VPNs in a broader network of Layer 3 switches and routers.

Figure 2 below shows traffic steering through logical network separation.

In Figure 2 above, traffic between the two groups of servers is steered through the security appliance simply because that is the only forwarding path by which communication can take place. Forwarding paths are created by the configuration of unique logical partitions in the network switch, such as a VLAN. Traffic entering the switch on one partition is contained to that partition by the switch hardware. The security appliance is attached to both partitions, whereas the servers are attached only to the partition in which they belong. The servers are attached to their partition as a result of the switch port configuration. Therefore, separation in this model is provided by switch configuration.
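The forwarding rule described for Figure 2 can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the device names and VLAN numbers are hypothetical, and two devices are treated as neighbors only when they share a logical partition.

```python
from collections import deque

# Hypothetical port-to-VLAN assignments on a single switch (illustrative only).
PORT_VLAN = {
    "web1": {10},
    "web2": {10},
    "db1": {20},
    "firewall": {10, 20},  # the security appliance attaches to both partitions
}

def shares_vlan(a, b, port_vlan):
    """Two devices can exchange frames only if they sit in a common VLAN."""
    return bool(port_vlan[a] & port_vlan[b])

def find_path(src, dst, port_vlan):
    """Breadth-first search over devices that share a VLAN; None if unreachable."""
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for other in port_vlan:
            if other not in seen and shares_vlan(node, other, port_vlan):
                seen.add(other)
                queue.append(path + [other])
    return None

print(find_path("web1", "db1", PORT_VLAN))  # ['web1', 'firewall', 'db1']
```

Remove the `firewall` entry from the table and `find_path` returns `None`: the only thing separating the two groups is the contents of that configuration table.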

The use of logical or physical separation between DMZ zones might be defined in the IT Security policy. Your security policy may require physical network isolation between security zones. On the other hand, the IT security policy may simply specify that there must be isolation between zones, but without any strict requirement of physical isolation. In such a case logical separation can be used to comply with the general policy of isolation.

Attaching Physical Separation to Logical Separation

When the physical network separation method is attached to a switch using logical separation, an interesting thing happens: you lose all characteristics of physical separation. If you think of physical separation as being the most secure approach (the highest denominator), and logical separation as being the less secure of the two (the lowest denominator), then when the two are attached together the entire network separation policy adopts the lowest common denominator: logical separation.

Figure 3 below shows attaching differing separation policies together. The result is inconsistent policy.

In Figure 3 above, the physical switches that were once adhering to a physical separation policy have now simply become extensions of what is universally a logical separation method. If your IT security policy specifically requires physical separation, this type of implementation would be considered “Out of Policy” and unacceptable.
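The "lowest common denominator" idea can be expressed as a tiny Python sketch. The ranking of the two methods follows the reasoning above; the enum and function names are hypothetical and exist only for illustration.

```python
from enum import IntEnum

class Separation(IntEnum):
    """Separation methods ranked as described above (higher is stricter)."""
    LOGICAL = 1
    PHYSICAL = 2

def effective_separation(segments):
    """The end-to-end posture is that of the weakest attached segment."""
    return min(segments)

# Two physically separate switches attached to one VLAN-partitioned switch:
network = [Separation.PHYSICAL, Separation.PHYSICAL, Separation.LOGICAL]
print(effective_separation(network).name)  # LOGICAL
```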

Maintaining IT security policy with DMZ server virtualization

Now that we have covered the basics of network isolation and security policy, let's circle back to the question we started with: How do you keep virtual machines from differing security zones isolated from a network communication perspective? Furthermore, how do we keep DMZ virtualization consistent with IT security policy?

In this article I am going to primarily focus on the IT security policy of physical network separation, and how that maps to server virtualization.

As I have already discussed, once you have set a policy of physical network separation you need to keep that isolation method consistent throughout the entire DMZ, otherwise you will have completely compromised the policy. Most people understand that. So when the time comes to migrate physical servers to virtual, every attempt is made to maintain physical isolation between virtual machines in differing security zones.

Before we can do that, we must first acknowledge and respect the fact that with server virtualization a network is created inside the Host machine, a virtual network. And when that Host machine is attached to the physical network through its network adapters, the virtual network on that Host machine becomes an extension of the physical network. And vice versa, the physical network becomes an extension of the virtual network. However you choose to think of it, the virtual and physical network together become one holistic data center network.

With VMware, the virtual network inside the ESX Host machine can be managed by an object called a vSwitch. From the perspective of the VI Client, multiple vSwitches can be created on a single ESX Host machine. This perception provided by VMware of having multiple vSwitches per ESX Host has led to the conventional thinking that physical network separation can be maintained inside the ESX Host machine. To do this you simply create a unique vSwitch for each security zone, then attach virtual machines to their respective vSwitch along with one or more physical network adapters. The physical adapter + vSwitch combination is then attached to a physically separate network switch for that DMZ only. You now have a consistent policy of physical network separation, right? More on that later…

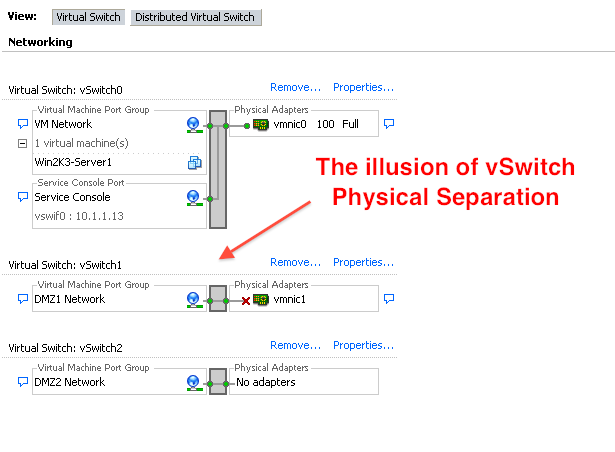

Figure 4 below shows the conventional thinking of vSwitch physical separation.

With VMware vSphere, you also have the option of using the Cisco Nexus 1000V in place of the vSwitch to gain added visibility and security features. However, one thing that some customers notice right away about the Nexus 1000V is that unlike the standard vSwitch, you cannot have multiple Nexus 1000V switches per ESX Host. <GASP!> How am I going to maintain physical separation between DMZ segments if I can’t have multiple Nexus 1000Vs per Host? Guess I can’t use the Nexus 1000V, right?

Not necessarily … Paul Fazzone from Cisco Systems, a Product Manager for Nexus 1000V, wrote an excellent article titled “Two vSwitches are better than 1, right?” that refutes this thinking. In this article Paul lays out the case that the Nexus 1000V’s Port Profiles and VLANs provide a security mechanism equivalent to, and even better than, multiple vSwitches, and that customers can safely deploy the Nexus 1000V in an environment where physical separation is the policy.

The problem I have with Paul Fazzone’s article is that it does not address the fact that two differing separation methods have been attached together, thus creating an inconsistent and lowest common denominator logical separation policy. The Nexus 1000V and its VLANs are a means of logical separation, a single switch containing multiple logical partitions. The minute you attach the Nexus 1000V to the physical network, the holistic data center network is now reverted to a logical separation policy… so … what’s the point in having separate physical switches anymore!?

No offense to Paul, he’s a REALLY BRIGHT guy, and he’s just doing his job of breaking down the obstacles for customers to adopt the Nexus 1000V.

Given that the Nexus 1000V is a single switch per ESX Host using logical partitions in the form of VLANs, a customer with a strict physical network separation policy may very well view the Nexus 1000V as not matching their security model and choose not to implement it solely for that reason. In doing so, the customer has sacrificed all of the additional security, troubleshooting, and visibility features of the Nexus 1000V, but to them that doesn’t matter because the ability to have multiple vSwitches is still viewed as a better match for maintaining a consistent physical separation policy.

The vSwitch ILLUSION: What you see isn't what you get

The conventional thinking up to this point has been that multiple vSwitches can be configured on an ESX Host to maintain a consistent architecture of physical network separation. Why would anybody think any differently? After all, when you configure networking on an ESX Host you see multiple vSwitches right before your very eyes that are presented to you as being separate from one another. This visual provides the sense that adding a new separate vSwitch is no different than adding a new separate physical switch, right?

Figure 5 - The vSwitch view from VMware VI Client

First of all, let’s ask ourselves this question: What is the unique security posture characteristic of two physically separate switches? Most people would tell you that each physical switch has its own software and unique forwarding control plane. A software bug or security vulnerability in one switch may not affect the other switch because each could be driven by different code. On the other hand, what is the unique security posture characteristic of a single switch with logical partitions? Most people would say that this switch is using common code and a common control plane, implementing separation via unique logical partitions.

With that understanding in mind, if I create multiple vSwitches on an ESX Host, each vSwitch should have its own unique software that drives it, and a unique control plane that does not require any logical partitioning to separate it from other vSwitches, right? Let’s go ahead and put this theory to the test. Let’s see how much Host memory is used when there is only 1 vSwitch configured:

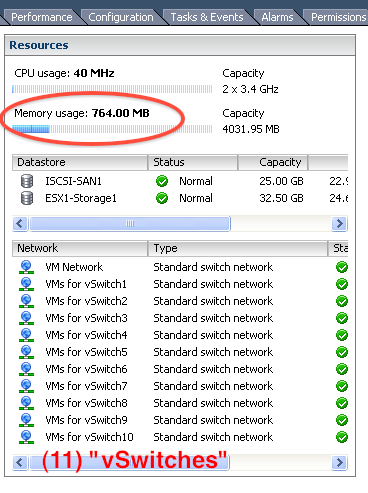

Figure 6 below shows Host memory usage with (1) vSwitch:

In Figure 6 above I have just (1) vSwitch configured on a Host with no virtual machines, and the memory used by the Host is 764MB. Perfect, we now have a memory baseline from which to proceed. If in fact every new vSwitch on an ESX Host provides the same physical separation characteristics as two separate physical switches, then each new vSwitch should result in a new copy of vSwitch code, consuming more host memory, right? Let’s add (10) more vSwitches to this Host and see what happens…

Figure 7 below shows a Host memory usage with (11) vSwitches:

Figure 7 above shows the same ESX Host with (11) vSwitches configured and no virtual machines. As you can see, the Host memory usage is still 764MB. Adding (10) vSwitches did not add a single MB of Host memory overhead. This is one simple example to show that (11), (20), or even (200) configured vSwitches on a Host are really just 1 switch, running one piece of common code and control plane, and each new “vSwitch” is nothing more than a new unique logical forwarding partition, no different than a single physical switch with a bunch of VLANs.
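To picture what "one switch with many logical partitions" means, here is a conceptual Python sketch. To be clear, this is not VMware's code; the class and method names are hypothetical. It only models the idea that adding a "vSwitch" adds an entry to a table inside one shared forwarding engine, rather than starting a second engine.

```python
class HostForwardingEngine:
    """One shared piece of forwarding logic per host (conceptual model only)."""

    def __init__(self):
        # "vSwitch" name -> set of ports attached to that partition
        self.partitions = {}

    def add_vswitch(self, name):
        # A new "vSwitch" is just a new entry in the table, not new code.
        self.partitions[name] = set()

    def attach(self, vswitch, port):
        self.partitions[vswitch].add(port)

    def can_forward(self, src_port, dst_port):
        # Separation is enforced by a lookup in the common engine.
        return any(src_port in ports and dst_port in ports
                   for ports in self.partitions.values())

engine = HostForwardingEngine()
for i in range(11):
    engine.add_vswitch(f"vSwitch{i}")
engine.attach("vSwitch0", "vm-a")
engine.attach("vSwitch0", "vm-b")
engine.attach("vSwitch1", "vm-c")

print(engine.can_forward("vm-a", "vm-b"))  # True: same partition
print(engine.can_forward("vm-a", "vm-c"))  # False: different partitions
```

All eleven partitions live inside the same `engine` object, so a bug in `can_forward` would affect every one of them at once. That is the security posture of logical separation.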

Still don’t believe me? Let me go back to Paul Fazzone’s article Two vSwitches are better than 1, right? in which Paul quotes Cisco’s principal software architect of the Nexus 1000V, Mark Bakke, from a video interview in which Mark says:

Each vSwitch is just a data structure saying what ports are connected to it (along with other information).

So while using vSwitches sounds more compartmentalized than VLANs, they provide equivalent separation

- Mark Bakke, Nexus 1000V Principal Software Architect, Cisco Systems

Mark would know better than anybody else, and my Host memory experiment above agrees with him. The conventional thinking that multiple vSwitches are providing physical separation is nothing more than an ILLUSION. The reality for the customer is that having an ESX Host with multiple vSwitches is providing the same security posture as a single switch with logical partitions, same as

Figure 8 below shows the actual REALITY of configuring multiple vSwitches

Figure 8 above shows that attaching a vSphere Host to physically separate networks is counterintuitive.

Consequences of the vSwitch ILLUSION

At this point you might be asking me: “OK, Brad. You made your point. But why are you fighting this battle? If a customer wants to enforce a policy of physically separate networks even with the understanding that the vSwitch is not providing the equivalent separation, what’s the harm in that? A little physical separation is better than none, right?”

My answer to that is simple: I have seen customers, on many occasions, make significant sacrifices in their virtualization architecture, believing they are getting something (physical isolation) that they really are not.

What are the sacrifices and consequences of the vSwitch illusion?

- Many adapters are required in the vSphere Host server to connect to each physically separate network.

- The requirement for many adapters results in purchasing larger servers simply for adapter real estate.

- Many adapters in the server force the customer to use 1GE, and prohibit the use of 10GE adapters.

- The requirement for many adapters forces the customer to use rack mount servers, and prohibits the choice of blade servers.

- The forced adoption of 1GE results in I/O bottlenecks that inhibit the scalability of the Host machine, resulting in fewer VMs per Host, resulting in more Host servers to service any given number of VMs, resulting in more power/cooling, more network switches, more vSphere licenses, you get the idea… more costly infrastructure for the customer.

- The insistence upon using multiple vSwitches per Host for “separation” prohibits the use of vNetwork Distributed Switches, either the VMware vDS or the Cisco Nexus 1000V. You can only have (1) VMware vDS per Host, and (1) Nexus 1000V per Host.

- Sacrificing vNetwork Distributed Switches results in more management complexity of the virtual network.

- Sacrificing the Nexus 1000V results in giving up valuable security features that would otherwise make what is already a logically separated network more secure. Not to mention the troubleshooting and per VM visibility the Nexus 1000V provides.

Before I continue on I want to let VMware off the hook here. By calling this a “vSwitch ILLUSION” I do not mean to insinuate that VMware has intentionally misled anybody. That’s not the case at all as I see it. In fact, VMware’s representation of multiple vSwitches was actually a good approach to make the networking aspects of VMware easier for the Server Administrator (their key buyer) to understand. Remember, VLANs are a concept that Network Administrators understand very well, but it generally isn’t the Network Administrator who’s purchasing the servers and VMware licenses. So, VMware wanted to make it easy for the Server Administrator, their customer, to understand the networking elements of ESX. While not all Server Administrators understand VLANs and logical separation, almost all of them do understand what a switch does, so the representation of multiple switches in the VI Client is a genius way of helping the Server Admin understand network traffic flow on the ESX host without needing a college degree in networking.

Consistency with Logical Separation using Server + Network Virtualization

If the IT security policy does not specifically require physical separation, and now with the understanding that multiple vSwitches are not equivalent to physical separation, then why not have a consistent architecture of logical separation? By combining the power of Server virtualization with Network virtualization you can achieve a secure, highly scalable virtual infrastructure.

Figure 9 below shows Server + Network virtualization:

In Figure 9 above the logical separation posture of the vSwitch is complemented by Network Virtualization in the physical network.

A DMZ virtualization architecture with consistent logical separation has the following advantages:

- Fewer physical networks means fewer physical adapters required in the server.

- Fewer adapters required in the server allows for 10GE.

- Fewer adapters required allows for a choice of either rack mount server or blade server.

- 10GE adapters reduce I/O bottlenecks and allow for high VM scalability per Host.

- Rack servers are right sized at 1U or 2U

- Using a single “vSwitch” design on the ESX Host allows for the option to use vNetwork Distributed Switch, or Cisco Nexus 1000V.

- Being able to use the Cisco Nexus 1000V allows for better virtual network security policy and controls.

Consistent Physical Separation using separate physical Hosts

If your IT security policy absolutely states that you must have “physical network separation” between security zones, you can still achieve a consistent separation model that adheres to the policy by deploying physically separate ESX Hosts for each security zone. This is the only way to truly remain in compliance with a strict physical separation policy.

Figure 10 below shows DMZ Virtualization consistent with physical separation:

In Figure 10 above the IT security policy of physical separation is consistently applied in both the virtual and physical networks.

The DMZ virtualization architecture consistent with physical separation has similar advantages:

- Adherence to a “Physical Isolation” IT security policy

- Fewer physical networks required per server means fewer physical adapters required in each server.

- Fewer adapters required in the server allows for 10GE.

- Fewer adapters required allows for a choice of either rack mount server or blade server.

- 10GE adapters reduce I/O bottlenecks and allow for high VM scalability per Host. Better VM scalability per Host results in fewer servers required, not more.

- Rack servers are right sized at 1U or 2U

- Using a single “vSwitch” design on the ESX Host allows for the option to use vNetwork Distributed Switch, or Cisco Nexus 1000V, and all of the management benefits they provide.

- Being able to use the Cisco Nexus 1000V allows for stronger network security features, policy, and controls.

Securing the Virtual Switch for DMZ Virtualization

Whether you choose the physical or logical separation architecture, you still have a virtual switch in each ESX Host that can and should be secured. The standard VMware vSwitch provides some security, but below is an overview of the security features of the Cisco Nexus 1000V that are above and beyond what is available with the standard vSwitch, or standard vDS.

Cisco Nexus 1000V Unique Security Features:

- IP Source Guard
  - duplicate IP, spoofed IP protection

- Private VLANs (source enforced)
  - stop denied frames at source host
  - minimize IP subnet exhaustion

- DHCP Snooping
  - Rogue DHCP server protection

- Dynamic ARP Inspection
  - Man-in-the-middle protection

- IP Access List
  - filter on TCP bits/flags
  - filter TCP/UDP ports
  - filter ICMP types/codes
  - filter source/dest IP

- MAC Access Lists
  - filter on Ethernet frame types
  - filter MAC addresses

- Port Security
  - spoofed MAC protection
  - protect physical network from MAC floods
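As one illustration of how a feature in the list above works in principle, here is a minimal Python sketch of the idea behind Dynamic ARP Inspection: an ARP packet is permitted only if its sender MAC and IP match a known binding for the port it arrived on. The binding table, port names, and addresses are hypothetical, and this is a conceptual model rather than the Nexus 1000V implementation.

```python
# Hypothetical binding table, such as DHCP snooping might build: port -> (MAC, IP).
BINDINGS = {
    "veth1": ("00:50:56:aa:00:01", "10.1.1.11"),
    "veth2": ("00:50:56:aa:00:02", "10.1.1.12"),
}

def arp_permitted(port, sender_mac, sender_ip, bindings=BINDINGS):
    """Permit an ARP packet only if the sender MAC/IP match the port's binding."""
    return bindings.get(port) == (sender_mac, sender_ip)

# A legitimate ARP from the VM on veth1:
print(arp_permitted("veth1", "00:50:56:aa:00:01", "10.1.1.11"))  # True

# The same VM claiming to own another address (a man-in-the-middle attempt):
print(arp_permitted("veth1", "00:50:56:aa:00:01", "10.1.1.1"))   # False
```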

Securing the Physical Network Switch against Attacks

As with the virtual switch, whether you adopt a consistent physical or logical DMZ virtualization architecture, there is still a physical switch that can and should be secured. Below are solutions to certain types of attacks:

Attack: MAC overflow. Attacker uses a tool like macof to flood the switch with many random source MACs. The switch MAC table quickly fills to capacity and begins flooding all subsequent frames to all ports like a hub. Attacker can now see traffic that was otherwise not visible.

Solution: Port Security. The switch can limit the number of MAC addresses learned on a switch port, thereby preventing this attack.
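The effect of a per-port MAC limit can be sketched in Python. This is a simplified model under my own assumptions (the class name, the limit of 2, and the port name are all hypothetical); it shows only that a flood of new source MACs on one port cannot grow the table beyond the configured limit.

```python
class PortSecuritySwitch:
    """Simplified MAC learning with a per-port address limit."""

    def __init__(self, max_macs_per_port=2):
        self.max_macs_per_port = max_macs_per_port
        self.learned = {}  # port -> set of learned source MACs

    def learn(self, port, mac):
        """Return True if the source MAC is accepted, False on a violation."""
        macs = self.learned.setdefault(port, set())
        if mac in macs:
            return True
        if len(macs) >= self.max_macs_per_port:
            return False  # limit reached: refuse to learn more addresses
        macs.add(mac)
        return True

def fake_mac(i):
    """Deterministic stand-in for the random source MACs a flooding tool sends."""
    return f"02:00:00:00:{i >> 8:02x}:{i & 0xff:02x}"

switch = PortSecuritySwitch(max_macs_per_port=2)
accepted = sum(switch.learn("Gi0/1", fake_mac(i)) for i in range(1000))
print(accepted, len(switch.learned["Gi0/1"]))  # 2 2
```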

Attack: VLAN Hopping. Attacker forms an ISL or 802.1Q trunk with the switch by spoofing DTP messages, gaining access to all VLANs. Alternatively, the attacker sends double-tagged 802.1Q frames to hop from one VLAN to another, sending traffic to a station it would otherwise not be able to reach.

Solution: Best Practice Configuration. Disable auto trunking (DTP) on all ports with switchport nonegotiate. VLAN tag all frames including the native VLAN on all trunk ports with switchport trunk native vlan tag. Use a dedicated VLAN ID for the native vlan on switch-to-switch trunk ports.
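To see why tagging the native VLAN and using a dedicated native VLAN ID help against double tagging, consider this simplified Python model. It assumes the attacker's access port shares a VLAN with the trunk's native VLAN (the condition the attack relies on) and that the first switch consumes the outer tag when classifying the frame. Function names and VLAN numbers are hypothetical.

```python
def egress_on_trunk(frame_vlan, inner_tags, native_vlan, tag_native):
    """Return the 802.1Q tag stack the first switch places on the trunk."""
    if frame_vlan == native_vlan and not tag_native:
        # Native VLAN traffic leaves untagged, exposing the inner tag.
        return list(inner_tags)
    return [frame_vlan] + list(inner_tags)

def vlan_seen_by_second_switch(tag_stack, native_vlan):
    """The next switch classifies on the outermost tag, or native if untagged."""
    return tag_stack[0] if tag_stack else native_vlan

def double_tag_attack(attacker_vlan, target_vlan, native_vlan, tag_native):
    """Attacker sends [attacker_vlan, target_vlan]; return the VLAN it lands in."""
    on_trunk = egress_on_trunk(attacker_vlan, [target_vlan], native_vlan, tag_native)
    return vlan_seen_by_second_switch(on_trunk, native_vlan)

# Attacker in VLAN 1, which is also the untagged native VLAN: the hop succeeds.
print(double_tag_attack(1, 20, native_vlan=1, tag_native=False))    # 20
# Native VLAN tagged on the trunk: the frame stays in VLAN 1.
print(double_tag_attack(1, 20, native_vlan=1, tag_native=True))     # 1
# Dedicated native VLAN ID that no host port uses: the frame stays in VLAN 1.
print(double_tag_attack(1, 20, native_vlan=999, tag_native=False))  # 1
```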

Attack: Rogue DHCP server. Attacker poses as a DHCP server, handing out its own IP address as the default gateway. Attacker can now see and copy all traffic from the victim machine, then forward traffic toward the real default gateway so the victim machine is unaware of a problem.

Solution: DHCP Snooping. Switch only allows DHCP responses on ports defined as trusted.
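The trusted-port rule can be sketched as a simple filter in Python. The port names are hypothetical; the message type names are the standard DHCP ones. This is a conceptual model, not a switch implementation.

```python
# DHCP message types that only a server should send.
SERVER_MESSAGES = {"DHCPOFFER", "DHCPACK", "DHCPNAK"}

def dhcp_permitted(port, message_type, trusted_ports):
    """Allow server responses only on trusted ports; client messages pass anywhere."""
    if message_type in SERVER_MESSAGES:
        return port in trusted_ports
    return True

trusted = {"uplink1"}  # hypothetical port facing the real DHCP server

print(dhcp_permitted("uplink1", "DHCPOFFER", trusted))     # True
print(dhcp_permitted("host7", "DHCPOFFER", trusted))       # False: rogue server dropped
print(dhcp_permitted("host7", "DHCPDISCOVER", trusted))    # True: ordinary client request
```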

Attack: Spanning Tree Spoofing. Attacker spoofs spanning tree bridge protocol data units (BPDUs) and forces a network forwarding topology change, either to cause disruption or to direct traffic in a manner that makes it more visible for snooping.

Solution: BPDU Guard, Root Guard. With BPDU Guard, the switch can immediately shut down host ports that send BPDUs. With Root Guard, the switch can prevent the Root switch from changing, which prevents the attacker from forcing a topology change.
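The BPDU Guard behavior described above can be modeled in a few lines of Python. The class, the port name, and the state strings are my own illustrative choices; the sketch shows only that a host-facing port stops forwarding as soon as it receives a BPDU.

```python
class HostPort:
    """Simplified host-facing switch port with optional BPDU Guard."""

    def __init__(self, name, bpdu_guard=True):
        self.name = name
        self.bpdu_guard = bpdu_guard
        self.state = "forwarding"

    def receive(self, frame_type):
        """Process an inbound frame; return True if it is accepted."""
        if self.state != "forwarding":
            return False  # port already shut down
        if frame_type == "BPDU" and self.bpdu_guard:
            self.state = "disabled"  # hosts should never send BPDUs
            return False
        return True

port = HostPort("Gi0/5")
print(port.receive("DATA"), port.state)  # True forwarding
print(port.receive("BPDU"), port.state)  # False disabled
print(port.receive("DATA"), port.state)  # False disabled
```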

Conclusion

Logical or physical separation can be used to isolate virtual machines from differing security zones. It’s important to keep the separation policy consistent throughout the physical and virtual network.

The VMware vSwitch does not provide physical isolation. The VMware VI Client provides the presentation of physically separate vSwitches, but this is nothing more than an illusion.

The VMware vSwitch provides logical separation no different than a physical switch with VLANs, or the Nexus 1000V with VLANs.

Customers wrongly accept sacrifices and suffer consequences to their virtualization architecture under the belief they are achieving physical isolation with the standard VMware vSwitch.

The virtual and physical switch can be consistently secured for DMZ virtualization with the Cisco Nexus 1000V and Cisco security features present in physical switches.

Customers benefit the most from DMZ virtualization when a consistent isolation policy is used in both the physical and virtual network.

Presentation Download

Wow! You made it this far? I have a reward for your time and attention!

You can download the 20 slide presentation I developed on this topic here:

Architecting DMZ Virtualization version 1.5, by Brad Hedlund

Cheers,

Brad